Looking back on the 10 months it took a programming beginner to become a Kaggle Expert

Now that I have become a Kaggle Expert, I decided to write up a personal retrospective. I hope it helps people who are interested in AI but cannot program yet.

1. Initial specifications (February 2019)

- Master's degree in mechanical engineering. A run-of-the-mill mechanical engineer.

- Honestly, I am not fond of linear algebra or statistics. I am good at mechanics.

- AI? Oh, that thing. The one that is super strong at shogi, right?

- Python? Isn't that the boxer from Street Fighter II?

2. Current specifications (December 2019)

- Kaggle Expert (not that impressive?)

- Decent results in machine learning competitions (Kaggle Kuzushiji Recognition: 7th place; Signate Tellus 3rd: 5th place)

- Machine learning: I can more or less write the code.

- Deep learning: I can more or less write the code.

- I can read related papers and implement the simple ones.

- I can start to see business applications too.

3. Why did I learn machine learning?

I had spent a year thinking "I got no results! I achieved nothing!!", so I decided to try something new. And in fact, **my world expanded** a little. I recommend learning machine learning to engineers who do the same thing every day or who are turning into a copy of their boss.

4. Learning flow

First, the overall flow is shown in the figure.

Apart from the first month and the very end, I learned purely by taking part in competitions.

4.1 Getting Started (First Month)

Coursera Machine Learning

The machine learning course by Dr. Andrew Ng. You can learn most of the basics here. It is definitely better to take this than any paid course. Highly recommended.

- The explanations are careful and easy to understand. He praises you like crazy.

- The programming assignments make you struggle just enough, so the material sticks.

- You can do it anywhere. You can watch the video on the train.

- And yet it's free!

Somehow, **toward the end of the course, just seeing the teacher's face gives you a sense of security** :relaxed:

Deep learning x Python implementation book

Because I knew little Python and Coursera's free course did not cover deep learning, I worked through a book. I learned how to do matrix calculations in Python (especially with numpy), how to draw simple plots, and the very basics of deep learning. Personally, I recommend hands-on books that make you write the code yourself. The book I used was "[First Deep Learning: Neural Networks and Backpropagation Learned with Python](https://www.amazon.co.jp/%E3%81%AF%E3%81%98%E3%82%81%E3%81%A6%E3%81%AE%E3%83%87%E3%82%A3%E3%83%BC%E3%83%97%E3%83%A9%E3%83%BC%E3%83%8B%E3%83%B3%E3%82%B0-Python%E3%81%A7%E5%AD%A6%E3%81%B6%E3%83%8B%E3%83%A5%E3%83%BC%E3%83%A9%E3%83%AB%E3%83%8D%E3%83%83%E3%83%88%E3%83%AF%E3%83%BC%E3%82%AF%E3%81%A8%E3%83%90%E3%83%83%E3%82%AF%E3%83%97%E3%83%AD%E3%83%91%E3%82%B2%E3%83%BC%E3%82%B7%E3%83%A7%E3%83%B3-Machine-Learning-%E6%88%91%E5%A6%BB/dp/4797396814)".

4.2 Kaggle debut (1st and 2nd months)

When I learned that there was a data science competition platform called Kaggle, I registered immediately, thinking, "Study and profit (prize money) at the same time? That's great!"

I was amazed, and very grateful, to find that solutions are shared. But when machine learning and code that I barely understand are also written up in English, a layman like me is close to tears :fearful:

Then I found a competition with plenty of Japanese-language write-ups, including on Qiita. Yes, it's Titanic.

Titanic competition

This competition is permanently open on Kaggle. The task is to predict which of the Titanic's passengers (people of various ages, genders, and social classes) survived.

Everything was new to me, so even manipulating tabular data was painful. Naturally, it was my first time using pandas. However, you can experience the whole process of data cleansing, feature analysis, model building, validation, and inference. There is a lot of information online, so I learned a great deal. I also implemented decision trees and random forests for the first time. I don't think there was any downside to doing it.

Thinking "I see, I understand machine learning now," I immediately jumped into a competition with prize money.

Kaggle Electric Wire Competition

As a mechanical engineer, I found failure analysis an easy topic to get into. The data was time-series data of a kind I often see, which made it interesting.

To me, seeing RNNs and GBDT for the first time, it already looked like a battle of the gods. I felt like Yamcha.

I copied a public solution (kernel) without hesitation. As you tweak it little by little and keep submitting, code that belonged to someone else gradually becomes your own. (However, as you add to it, the code gets messier and the score barely changes.) I'm convinced that **at first, copying and tinkering is all you need to do**.

As expected, my result was that of a total small fry. But thanks to the kernels, I learned a lot, from Python coding to time-series analysis. Thank you to everyone who shared their kernels.

Kaggle Earthquake Competition

Feeling confident that I had "mastered time series", I joined a similar competition that happened to be held right afterwards. **This time, I tried to get to a submission without looking at any public solutions.**

Struggling, trying, and failing gave me a great deal of experience in researching and working things out on my own. You can never get that with a passive attitude (just reading a book). Once again I felt that learning through competitions is wonderful.

After that, I entered the Machine Learning Contest for Manufacturers sponsored by Information Services International-Dentsu (ISID) and won by a fluke. This was also time-series data. It may be held again this year, so if you are interested, give it a try.

4.3 On to deep learning (3rd-4th months)

I became interested in deep learning, especially for images. The lunch boxes I ordered regularly at home were so badly arranged that I thought even an AI could tell. **That was the trigger.**

Bento presentation evaluation

I photographed lunch boxes over and over. My wife eyed me suspiciously as I rearranged the food and took pictures. The karaage went back and forth many times.

This was the first time I worked seriously with a CNN (convolutional neural network). Since I did not have many images, I learned transfer learning. Of course I don't have a GPU, so I used **Google Colab (\*)**.

It reached some level of accuracy, but all I ended up with was a mysterious scoring system that gave nearly the same score every time. I also got fed up with my Google Drive filling up with bento images, so I quit. **It was a failure.** Once again: **a solid goal and purpose are important.**

(\*) Google Colab: the best free service Google offers, to put it mildly. You can do deep learning right in the browser.

Signate Tobacco Competition: First Object Detection

I learned that there is a domestic competition platform called Signate, and apparently an image-related competition was being held, so I participated.

**A competition to identify the brands of cigarettes displayed on shelves.** This was my first object detection task. I thought it was perfect for me, since I believed I had mastered transfer learning.

But object detection? YOLO? SSD? What on earth are those? After a little research, I found that YOLO had reached v3 and was apparently quite good. Fortunately, implementations were lying around, so I could easily look at the code.

However, I could not make sense of the contents, and whoever wrote them could only be a god. There was nothing for it, so once again I started with **an almost wholesale copy**. But as I played around with it, it gradually became my code. (And it gradually became crappier and crappier code :poop:) Around this point I became able to read papers a little. Fortunately there was plenty of material on YOLO, so I read a lot.

But I was still a small fry, so I did not win a prize. However, I became interested in object detection around this time and kept reading papers afterwards.

**This applies to everything, but I think there is a considerable gap between "I don't know" and "I know", and another big gap between "I know" and "I can do it". I think it is important to read properly and then implement.**

Also, since solutions often cannot be shared on Signate, I personally recommend Kaggle for your debut.

https://signate.jp/competitions/159#abstract


4.4 Introduction to tabular competitions (4th-5th months)

The image competition made me realize how much machine specs matter, and I was fed up with slow training iterations, so I took on a tabular competition. I had dabbled in time-series competitions, but had no experience with ordinary tabular ones.

Around that time, Signate happened to host an interesting competition.

Iida Sangyo Land Competition

This is a very basic competition that predicts the prices of land properties in Saitama.

I learned the joy of creating features, such as computing the time distance from longitude and latitude, or deriving the area per room from the number of rooms. **It is quite addictive when a feature that suddenly occurs to you turns out to improve accuracy.** I think idea people who are good at daydreaming up all sorts of things are well suited to this. It gets the brain chemicals flowing.
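The two kinds of features mentioned above can be sketched roughly as follows. This is only an illustration, not the code used in the competition: the column names (`lat`, `lon`, `area_m2`, `rooms`), the coordinates, and the reference point are all hypothetical, and a straight-line haversine distance stands in for whatever distance measure was actually used.

```python
import math

EARTH_RADIUS_KM = 6371.0  # mean Earth radius


def haversine_km(lat1, lon1, lat2, lon2):
    """Great-circle distance in km between two (lat, lon) points given in degrees."""
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    dphi = phi2 - phi1
    dlambda = math.radians(lon2 - lon1)
    a = math.sin(dphi / 2) ** 2 + math.cos(phi1) * math.cos(phi2) * math.sin(dlambda / 2) ** 2
    return 2 * EARTH_RADIUS_KM * math.asin(math.sqrt(a))


def add_features(row, ref_lat, ref_lon):
    """Return a copy of `row` with two derived features added."""
    out = dict(row)
    # Distance from the property to a reference point (hypothetical, e.g. a station)
    out["dist_to_ref_km"] = haversine_km(row["lat"], row["lon"], ref_lat, ref_lon)
    # Area per room; fall back to NaN when the room count is zero
    out["area_per_room"] = row["area_m2"] / row["rooms"] if row["rooms"] else float("nan")
    return out


# Hypothetical property record and reference point
prop = {"lat": 35.8617, "lon": 139.6455, "area_m2": 120.0, "rooms": 4}
feat = add_features(prop, ref_lat=35.9062, ref_lon=139.6237)
print(feat["dist_to_ref_km"], feat["area_per_room"])
```

Keeping each feature as a small function makes it cheap to try an idea, check the validation score, and discard it if it does not help.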

I also learned the importance of validation. It did not really sink in when Dr. Andrew Ng explained it on Coursera, but once you are actually judged on accuracy, poor validation produces contradictory results. I think this, too, is valuable knowledge you gain from competitions.

As a bonus, you become (needlessly) knowledgeable about Saitama.

I also quietly entered the molecular competition, but in the end I did not have much time for it. GNNs look fun, so learning them is a task for the future.

4.5 Back to image competitions (6th-9th months)

I had kept studying detection tasks since the tobacco competition, and just then a Japanese-hosted competition was held on Kaggle.

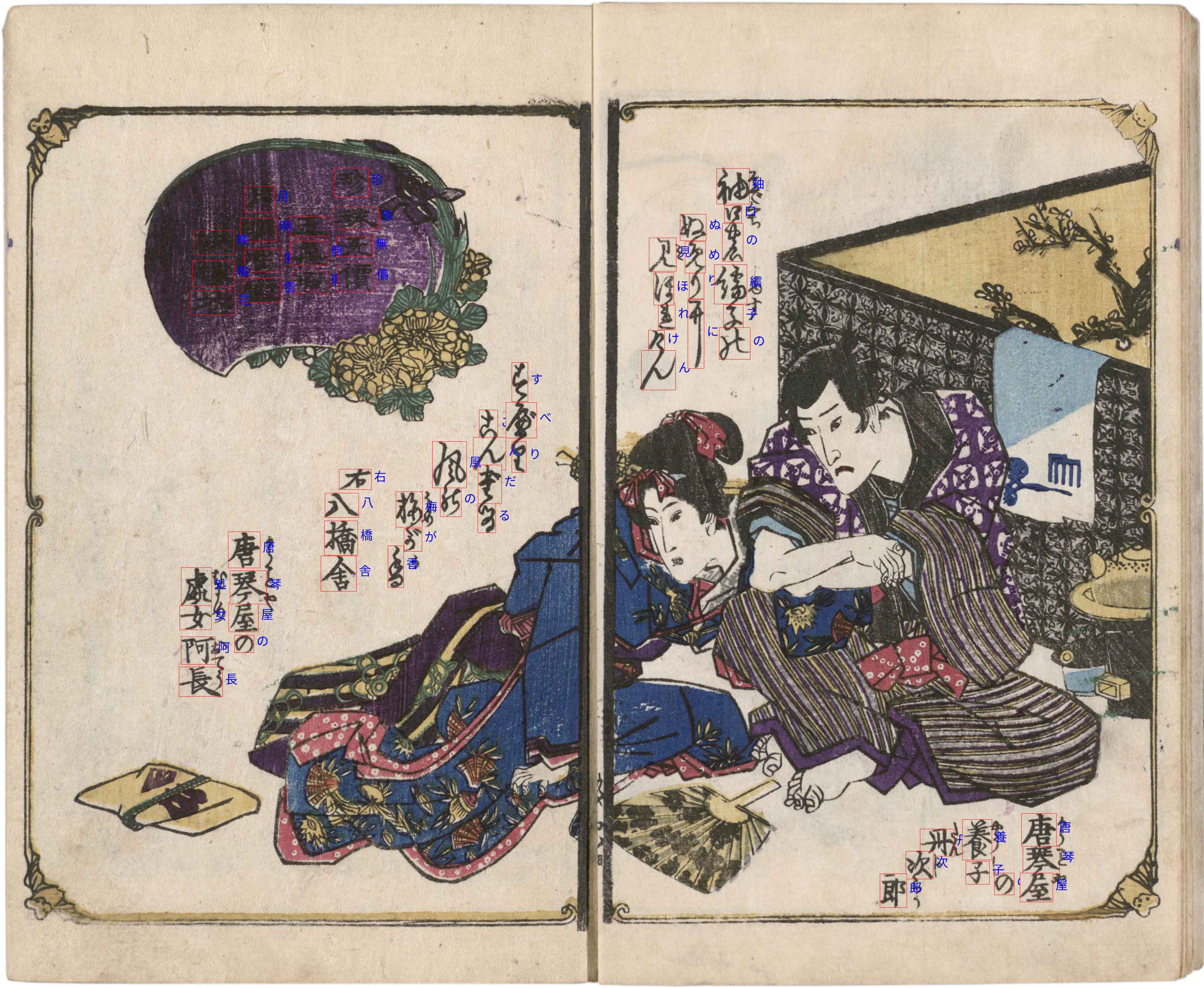

Kaggle Kuzushiji Competition

The task was to detect and classify kuzushiji (cursive Japanese characters). Detection itself was not very hard, so I implemented as much as possible myself. By this time I could implement papers to some extent, so I actively tried new ones.

As a way of giving back to Kaggle for all the help, **I also shared my solution as a kernel.**

This was the most seriously I had engaged with convolutional neural networks so far. If you are deliberate about what you want the network to look at, it seems the network will look at it properly. For the first time, I had to think seriously about the influence of context. Looking at "The Elephant in the Room", I discovered how much fun CNNs are. Finding it interesting was a big driving force, and I went through a lot of trial and error.

The importance of validation that I learned in the land competition also paid off. When documents by the same author were mixed across training and validation, accuracy was overestimated. A group split was the right answer.
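A group split like the one described above can be sketched as follows. This is a minimal illustration under stated assumptions, not the code from the competition: the function name `group_train_val_split` and the author labels are hypothetical, and `val_fraction` is a fraction of groups, not of samples. In practice, scikit-learn's `GroupKFold` or `GroupShuffleSplit` provide the same idea.

```python
import random
from collections import defaultdict


def group_train_val_split(groups, val_fraction=0.2, seed=0):
    """Split sample indices so that no group appears in both train and validation."""
    by_group = defaultdict(list)
    for idx, g in enumerate(groups):
        by_group[g].append(idx)

    # Shuffle the group labels (not the samples) reproducibly
    unique = sorted(by_group)
    random.Random(seed).shuffle(unique)

    # Hold out whole groups for validation
    n_val = max(1, round(len(unique) * val_fraction))
    val_idx = [i for g in unique[:n_val] for i in by_group[g]]
    train_idx = [i for g in unique[n_val:] for i in by_group[g]]
    return sorted(train_idx), sorted(val_idx)


# Hypothetical author label for each page image
authors = ["a", "a", "b", "c", "c", "c", "d", "e", "e", "f"]
train_idx, val_idx = group_train_val_split(authors, val_fraction=0.3)

train_authors = {authors[i] for i in train_idx}
val_authors = {authors[i] for i in val_idx}
print(train_authors, val_authors)
```

Because every page by a given author lands on one side only, the validation score reflects performance on unseen handwriting instead of on authors the model has already memorized.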

Although it was a Kaggle competition, it was a small one without medals, and I miraculously finished 7th.

Unfortunately that was outside the prize money range, but I did receive an award, which boosted my motivation.

https://www.kaggle.com/c/kuzushiji-recognition


Signate Sea Ice Competition

**If you can do a detection task, you can do a segmentation task too.** (I think modeling is more troublesome for the former.) Right on cue, Signate held a sea ice competition, so I entered. The task is to identify sea ice regions from high-resolution satellite imagery.

**By this time I could read papers and implement them to some extent**, so I experimented with loss functions and implemented recent models such as EfficientNet.

With only 6 submissions, I somehow finished 5th. I was overtaken by the Russians (?) who flooded in near the end, so I missed the prize money this time as well. Unfortunately, due to Signate's rules, I cannot share the solution :frowning2:

https://signate.jp/competitions/183


5. Summary

**In short, machine learning is fun, so let's do it together :relaxed:**

- Even people with no software background can do it! (What matters is a mind that keeps asking "why?")

- Even an amateur can become reasonably competent in about a year!

- I recommend competitions because they use real data and let you learn through practice.

- I recommend competitions because you can enjoy the ups and downs of rankings and accuracy.

6. Finally

I said I became a Kaggle Expert, but on closer inspection I have no competition medals. Sorry for showing off.
