Building an HPC learning environment using Docker Compose (C, Python, Fortran)

I've always wanted to study HPC (High Performance Computing) once I had a GPU. However, building an environment for it is tedious in many ways. While wondering whether I could set up a learning environment more easily, I came up with the idea of using Docker and Docker Compose. In this article, we build a container environment that provides C-based HPC libraries such as CUDA and OpenCL, as well as PyCUDA and PyOpenCL, which bring HPC to Python.

Final goal

Build a development environment for HPC-related libraries inside a Docker container, and open a port so that you can log in to it over SSH. The point is to be able to SSH into the container and develop there using VS Code's Remote Development Extension.

Libraries to install

The libraries to be installed are listed below. Except for OpenCL, PyCUDA, and PyOpenCL, all of them are installed from the NVIDIA HPC SDK.

| Name | Language | Remarks |
|---|---|---|
| CUDA | C | The standard form of CUDA: NVIDIA's proprietary extension of C |
| CUDA | Fortran[^fortran] | NVIDIA has released an official compiler, nvfortran |
| OpenCL | C | Parallel computing framework; together with CUDA, one of the two giants |
| PyCUDA | Python | Wrapper for running CUDA from Python |
| PyOpenCL | Python | Wrapper for running OpenCL from Python |
| OpenMP | C | Directive-based API for easily using multi-core CPUs |
| OpenACC | C | Directive-based API for parallel computing on GPUs |
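Once the container is up, it can be handy to confirm that the tools in this table are actually reachable. The following is a minimal Python sketch. It assumes the usual command names (`nvcc`, `nvc`, `nvfortran`, `gfortran`) and module names (`pycuda`, `pyopencl`). The checklist and the `probe_environment` function are my own, so adjust them to your install. It only looks things up and does not touch the GPU.

```python
"""Report which tools from the library table are visible in the current environment.

Assumption: the command and module names below are the usual ones; this checklist
is hypothetical, so edit it to match your install.
"""
import importlib.util
import shutil

# Command-line tools expected on PATH inside the container.
COMMANDS = ["nvcc", "nvc", "nvfortran", "gfortran"]
# Python packages installed with pip in the Dockerfile.
MODULES = ["pycuda", "pyopencl"]


def probe_environment(commands=COMMANDS, modules=MODULES):
    """Return {name: True/False} for each command and module."""
    report = {}
    for cmd in commands:
        # shutil.which returns the full path, or None if the command is not on PATH.
        report[cmd] = shutil.which(cmd) is not None
    for mod in modules:
        # find_spec locates a top-level module without importing it (no GPU needed).
        report[mod] = importlib.util.find_spec(mod) is not None
    return report


if __name__ == "__main__":
    for name, found in probe_environment().items():
        print(f"{name:10s} {'found' if found else 'MISSING'}")
```

OpenMP and OpenACC are not probed separately because they are compiler features (for example of `nvc`) rather than standalone commands.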

Environment construction using Docker Compose

Create the Dockerfile and docker-compose.yml used to build the environment as follows. Docker Compose is designed for starting multiple containers and networking them together, but I often use it like Make, as a shortcut for build and run commands. The Dockerfile is based on the contents of several past official container images. Since cuDNN, PyCUDA, OpenCL, and PyOpenCL are not included in the NVIDIA HPC SDK, I started from the official CUDA 10.1 container image and installed them on top of it. The login password is passed as a build argument, so that you can log in to the container over SSH.

2020/9/21 postscript: This setup assumes that you log in remotely over SSH from a client machine and use the container as a development environment. If you will not access it from a client machine, you can omit the SSH settings at the end of the Dockerfile (the `# Setup SSH` part).

Dockerfile

```dockerfile
FROM nvidia/cuda:10.1-cudnn7-devel

# Root password for SSH login, passed in with --build-arg
ARG PASSWD
ENV CUDA_VERSION=10.1

# Upgrade OS
RUN apt update && apt upgrade -y

# Install some requirements
RUN apt install -y bash-completion build-essential gfortran vim wget git openssh-server python3-pip

# Install NVIDIA HPC SDK
RUN wget https://developer.download.nvidia.com/hpc-sdk/nvhpc-20-7_20.7_amd64.deb \
    https://developer.download.nvidia.com/hpc-sdk/nvhpc-2020_20.7_amd64.deb \
    https://developer.download.nvidia.com/hpc-sdk/nvhpc-20-7-cuda-multi_20.7_amd64.deb
RUN apt install -y ./nvhpc-20-7_20.7_amd64.deb ./nvhpc-2020_20.7_amd64.deb ./nvhpc-20-7-cuda-multi_20.7_amd64.deb

# Install cuDNN
ENV CUDNN_VERSION=7.6.5.32
RUN apt install -y --no-install-recommends libcudnn7=$CUDNN_VERSION-1+cuda$CUDA_VERSION && \
    apt-mark hold libcudnn7 && rm -rf /var/lib/apt/lists/*

# Install OpenCL
RUN apt update && apt install -y --no-install-recommends ocl-icd-opencl-dev && rm -rf /var/lib/apt/lists/*

# Make "python" and "pip" point to Python 3
RUN ln -fs /usr/bin/python3 /usr/bin/python
RUN ln -fs /usr/bin/pip3 /usr/bin/pip

# Put the CUDA toolkit on the executable, header, and library search paths
ENV PATH=/usr/local/cuda-$CUDA_VERSION/bin:$PATH
ENV CPATH=/usr/local/cuda-$CUDA_VERSION/include:$CPATH
ENV LIBRARY_PATH=/usr/local/cuda-$CUDA_VERSION/lib64:$LIBRARY_PATH

# Install PyCUDA
RUN pip install pycuda

# Install PyOpenCL
RUN pip install pyopencl

# Setup SSH
RUN mkdir /var/run/sshd
# Set root's password to the value of the PASSWD build argument
RUN echo "root:${PASSWD}" | chpasswd
RUN sed -i 's/#PermitRootLogin prohibit-password/PermitRootLogin yes/' /etc/ssh/sshd_config
RUN sed -i 's/#PasswordAuthentication/PasswordAuthentication/' /etc/ssh/sshd_config
RUN sed 's@session\s*required\s*pam_loginuid.so@session optional pam_loginuid.so@g' -i /etc/pam.d/sshd

EXPOSE 22
CMD ["/usr/sbin/sshd", "-D"]
```

docker-compose.yml

```yaml
version: "2.4"
services:
  hpc_env:
    build:
      context: .
      dockerfile: Dockerfile
      args:
        - PASSWD=${PASSWD}
    runtime: nvidia
    ports:
      - '12345:22'
    environment:
      - NVIDIA_VISIBLE_DEVICES=all
      - NVIDIA_DRIVER_CAPABILITIES=all
    volumes:
      - ../work:/root/work
    restart: always
```

Build the image

Create a container image from the Dockerfile. You don't want the password to end up in .bash_history, so the script reads it from an external file (auth.txt, containing the password you want to set) and passes it as a build argument. The shell script that runs the command is shown below.

build_env.sh

```bash
#!/bin/bash
# Read the password from auth.txt and pass it to the build as PASSWD
cat auth.txt | xargs -n 1 sh -c 'docker-compose build --build-arg PASSWD="$0"'
```
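For comparison, here is a minimal Python sketch of the same step. It assumes that `auth.txt` holds the password on its first line and that `docker-compose` is on PATH. `read_password` and `build_command` are hypothetical helper names. Passing an argument list to `subprocess.run` avoids the shell entirely, so a password containing spaces stays one argument, whereas `xargs -n 1` would split it. Docker's documentation warns that build-arg values can be inspected with `docker history` on the resulting image. This approach therefore only keeps the password out of your shell history.

```python
"""Read the SSH password from auth.txt and run `docker-compose build` with it.

Assumptions: auth.txt holds the password on its first line; docker-compose is on PATH.
The helper names are hypothetical.
"""
import subprocess
from pathlib import Path


def read_password(path="auth.txt"):
    # Keep only the first line, without its newline; spaces inside are preserved.
    lines = Path(path).read_text().splitlines()
    if not lines or not lines[0]:
        raise ValueError(f"no password found in {path}")
    return lines[0]


def build_command(password):
    # An argv list is executed directly, so no shell quoting or splitting happens.
    return ["docker-compose", "build", "--build-arg", f"PASSWD={password}"]


def build(path="auth.txt"):
    # check=True raises CalledProcessError if the build exits with a non-zero status.
    subprocess.run(build_command(read_password(path)), check=True)


if __name__ == "__main__":
    build()
```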

Run the build

Just run the shell script. The intermediate output is omitted here, but if it ends with a "Successfully ..." message, the build succeeded. apt sometimes fails during the cuDNN setup, but retrying a few times lets the build go through. (I don't know the exact cause.)

```console
$ ./build_env.sh
...
...
Successfully tagged building-hpc-env_hpc_env:latest
```
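Because the cuDNN step occasionally fails and then succeeds on a retry, you can automate the retry. Below is a minimal sketch. It assumes `build_env.sh` exits with a non-zero status when the build fails. `run_with_retries`, the three attempts, and the 10-second pause are my own arbitrary choices. Docker caches the layers that were already built, so each retry should resume from the failing step instead of starting over.

```python
"""Retry a flaky build command a few times before giving up.

Assumption: the command returns a non-zero exit status on failure.
run_with_retries is a hypothetical helper; attempts and delay are arbitrary.
"""
import subprocess
import time


def run_with_retries(cmd, attempts=3, delay_s=10.0, runner=subprocess.run, sleep=time.sleep):
    """Run cmd until it exits with status 0; return the 1-based successful attempt.

    runner and sleep are injectable so the loop can be tested without Docker.
    Raises RuntimeError if every attempt fails.
    """
    for attempt in range(1, attempts + 1):
        result = runner(cmd)
        if result.returncode == 0:
            return attempt
        print(f"attempt {attempt}/{attempts} failed with exit code {result.returncode}")
        if attempt < attempts:
            sleep(delay_s)  # short pause before retrying
    raise RuntimeError(f"{cmd!r} failed {attempts} times")


if __name__ == "__main__":
    run_with_retries(["./build_env.sh"])
```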

Start the container

All that is left is to start the container. docker-compose warns that PASSWD is not set because docker-compose.yml references `${PASSWD}`. The value is only needed at build time, so you can safely ignore the warning.

```console
$ docker-compose up -d
WARNING: The PASSWD variable is not set. Defaulting to a blank string.
Creating network "building-hpc-env_default" with the default driver
Creating building-hpc-env_hpc_env_1 ... done
```

Checking the connection to the container

Check that you can connect over SSH. I was able to log in with my password without any problems.

2020/9/21 postscript: The check below connects from localhost, but you can also connect from another machine with:

```shell
ssh -p 12345 root@<REMOTE_IP_ADDRESS>
```

This is also how you connect from VS Code on the client using the Remote Development Extension.

```console
$ ssh -p 12345 root@localhost
root@localhost's password:
Welcome to Ubuntu 18.04.5 LTS (GNU/Linux 5.4.0-45-generic x86_64)

* Documentation: https://help.ubuntu.com
* Management: https://landscape.canonical.com
* Support: https://ubuntu.com/advantage

This system has been minimized by removing packages and content that are
not required on a system that users do not log into.

To restore this content, you can run the 'unminimize' command.

The programs included with the Ubuntu system are free software;
the exact distribution terms for each program are described in the
individual files in /usr/share/doc/*/copyright.

Ubuntu comes with ABSOLUTELY NO WARRANTY, to the extent permitted by
applicable law.

root@bd12d0a840e6:~#
```
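Before typing a password, you can check that sshd is answering on the published port. The Python sketch below assumes the `12345:22` mapping from docker-compose.yml. It connects to the port and reads the identification line that an SSH server sends first, which starts with `SSH-` (RFC 4253). `check_sshd` and `parse_ssh_banner` are hypothetical names. A refused connection raises `ConnectionRefusedError`, which usually means either the container is not running or the port mapping differs.

```python
"""Pre-flight check that an SSH server answers on the container's published port.

Assumptions: host/port defaults match this article's docker-compose.yml (12345 -> 22).
Function names are hypothetical.
"""
import socket


def parse_ssh_banner(line):
    """Return the identification string if `line` (bytes) looks like one, else None."""
    text = line.decode("ascii", errors="replace").strip()
    return text if text.startswith("SSH-") else None


def check_sshd(host="localhost", port=12345, timeout=5.0):
    with socket.create_connection((host, port), timeout=timeout) as sock:
        data = b""
        # The identification line ends with CR LF and is at most 255 bytes long.
        while not data.endswith(b"\n") and len(data) < 255:
            chunk = sock.recv(1)
            if not chunk:  # server closed the connection
                break
            data += chunk
    return parse_ssh_banner(data)


if __name__ == "__main__":
    banner = check_sshd()
    print(banner or "port is open, but no SSH banner was received")
```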

Bonus 1: Working with VS Code

I won't go into the detailed setup, but VS Code's Remote Development Extension lets you study HPC from VS Code, as shown below. (The figure shows a .cuf file, i.e. CUDA Fortran source.)

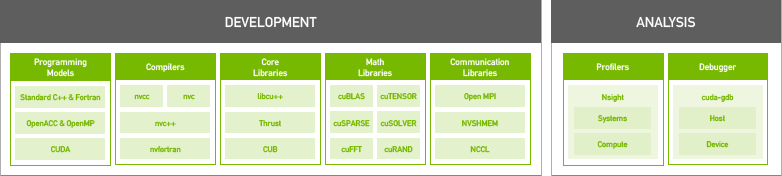
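The Remote - SSH extension (part of the Remote Development pack) lists hosts from `~/.ssh/config`, so one convenient setup is a host entry for the container. The sketch below prints such an entry. The alias `hpc-env` is made up, while port 12345 and user `root` follow this article's docker-compose.yml and Dockerfile. `ssh_config_entry` is a hypothetical helper.

```python
"""Print an ~/.ssh/config entry for the container.

Assumptions: the alias is made up; port 12345 and user root match this setup.
"""


def ssh_config_entry(alias, hostname, port=12345, user="root"):
    # Host, HostName, Port and User are standard OpenSSH ssh_config keywords.
    return "\n".join([
        f"Host {alias}",
        f"    HostName {hostname}",
        f"    Port {port}",
        f"    User {user}",
        "",  # trailing newline so entries can be appended one after another
    ])


if __name__ == "__main__":
    # Use the remote machine's IP instead of localhost when connecting from another client.
    print(ssh_config_entry("hpc-env", "localhost"), end="")
```

Append the output to `~/.ssh/config` on the client. After that, `ssh hpc-env` (or choosing `hpc-env` in VS Code) should connect.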

Bonus 2: NVIDIA HPC SDK

The NVIDIA HPC SDK, released by NVIDIA in August 2020, bundles NVIDIA's compilers and libraries for High Performance Computing into a single development environment. Paid technical support is available, but using the libraries and compilers themselves is free. Previously, CUDA Fortran required a separate compiler, the PGI compiler. With the NVIDIA compilers, CUDA can be used from both C and Fortran. The included compilers are listed below, and there is quite a selection.

Contents of /opt/nvidia/hpc_sdk/Linux_x86_64/20.7/compilers/bin:

```console
# ls
addlocalrc jide-common.jar ncu nvaccelinfo nvcudainit nvprof pgaccelinfo pgf77 pgsize tools
balloontip.jar jide-dock.jar nsight-sys nvc nvdecode nvsize pgc++ pgf90 pgunzip
cuda-gdb llvmversionrc nsys nvc++ nvextract nvunzip pgcc pgf95 pgzip
cudarc localrc nv-nsight-cu-cli nvcc nvfortran nvzip pgcpuid pgfortran rcfiles
ganymed-ssh2-build251.jar makelocalrc nvaccelerror nvcpuid nvprepro pgaccelerror pgcudainit pgprepro rsyntaxtextarea.jar
```
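The listing contains both the new `nv*` drivers and `pg*` names carried over from the PGI compilers. My understanding is that `pgcc`, `pgc++`, and `pgfortran` correspond to `nvc`, `nvc++`, and `nvfortran`. The Python sketch below encodes only that assumed mapping to rewrite old build commands and leaves everything else unchanged. `modernize` is a hypothetical helper. It sticks to Python 3.6 features, since that is the Python 3 version Ubuntu 18.04 ships.

```python
"""Rewrite commands that start with an old PGI driver name to the NVIDIA HPC SDK name.

Assumption: pgcc -> nvc, pgc++ -> nvc++, pgfortran -> nvfortran; other names are untouched.
"""
import os
import shlex

# Assumed mapping from legacy PGI driver names to NVIDIA HPC SDK driver names.
PGI_TO_NV = {"pgcc": "nvc", "pgc++": "nvc++", "pgfortran": "nvfortran"}


def modernize(command):
    """Replace a leading PGI driver name (with or without a directory) by its nv* name."""
    argv = shlex.split(command)
    if argv:
        head, name = os.path.split(argv[0])
        if name in PGI_TO_NV:
            argv[0] = os.path.join(head, PGI_TO_NV[name])
    # shlex.join needs Python 3.8, so quote each argument by hand.
    return " ".join(shlex.quote(arg) for arg in argv)


if __name__ == "__main__":
    print(modernize("pgfortran -O2 saxpy.cuf -o saxpy"))
```

Running it prints `nvfortran -O2 saxpy.cuf -o saxpy`.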

Summary

Setting up an environment for HPC library development is complicated and tedious, but Docker made it easy and hassle-free. I have several HPC technical books at hand, so I will use this environment to work through them.

References

- CUDA 10.2 Dockerfile

- cuDNN Dockerfile

- OpenCL Dockerfile

- Installing PyCUDA on Ubuntu Linux

- Keep the Docker container running

- NVIDIA HPC SDK

- NVIDIA HPC SDK Version 20.7 Downloads

- About HPC Compilers of NVIDIA HPC SDK

- How to make a container that can log in with SSH (Ubuntu 18 compatible version)

[^fortran]: I added CUDA Fortran support because I'd like my friends who work with Fortran to use this environment too.