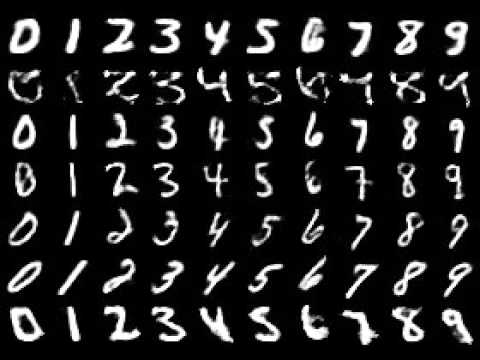

Model generated by Variational Autoencoder (VAE)

This article is the 13th day of Machine Learning Advent Calender. Let's summarize the results of trying the generative model with Variational Autoencoder.

Tell me what the theory is like!

-Infer the latent variable Z from the test data X( Z|X ), X based on Z and label Y|Z,It is to regenerate Y. ――I think it's interesting that you can input an arbitrary latent variable and generate an X with label Y in the representation of that latent variable.

For example, please watch this video.

This is the result of an experiment that generated data with labels 0-9 by randomly inputting arbitrary latent variables to the trained model. It's familiar MNIST data, but it's not cut out from the dataset, it's generated by VAE. By using the latent variable obtained for a certain input, it is possible to generate label data similar to that input.

For example, such an image.

The number on the far left is an image taken from MNIST and is used as input. Images from 0 to 9 are used as input X, and the latent variables Z obtained from them are used to generate X | Z, Y. You can see that the image from 0 to 9 is obtained by imitating the style of the input image (thick lines, how to make a curve, etc.). When I read the paper, I first misunderstood, but the images from 0 to 9 are not the images of similar style brought from MNIST, but the image data generated by NN. It's pretty interesting, isn't it?

Internal theory

――I thought I'd write it, but it became awkward to write the formula, and it will be 14 days in 15 minutes, so if you are interested, please read the dissertation! http://papers.nips.cc/paper/5352-semi-supervised-learning-with-deep-generative-models.pdf

--As an implementation example, I will put the one implemented by chainer here. However, since it is not maintained, it will not work with the latest version of chainer. .. .. Thankfully, there was a person who wrote how to deal with it, so please try this article. .. .. Pururiku is welcome. https://github.com/RyotaKatoh/chainer-Variational-AutoEncoder

Possible applications

--Generation of data set for supervised learning (semi-supervised learning) --Legal law. --Generation of a new image that imitates the training data set --By entering an arbitrary value in the hidden layer, you can generate a new image while maintaining the space learned to some extent. ――But in this field, I feel that methods such as DeepDream and Stylenet are interesting. --Application to time series data --Example of generating new music from music learned by Variational Recurrent Autoencoder. (I tried it before, but it didn't give very interesting results.) ――In addition to music, there are various uses such as document generation and motion capture data.

Good reason

――Actually, I wanted to read another paper or try it with Tensorflow, but I couldn't take the time, and the tea became muddy like this. (The VAE experiment was done around August.) ――I want to learn time management techniques.

reference

- http://deeplearning.jp/wp-content/uploads/2014/04/dl_hacks2015-04-21-iwasawa1.pdf

- http://www.slideshare.net/beam2d/semisupervised-learning-with-deep-generative-models