[RUBY] Control the Linux trackpad

Trackpad gestures not working on Linux desktops

Linux desktops lag far behind macOS and Windows in trackpad support.

Trackpad gestures on the Mac are now firmly established, and natural scrolling, in which the content follows the direction your fingers move, is now available on a variety of operating systems, including Linux.

Windows has also improved its gesture handling since Windows 10, and although it is not perfect, it can be operated much like a MacBook.

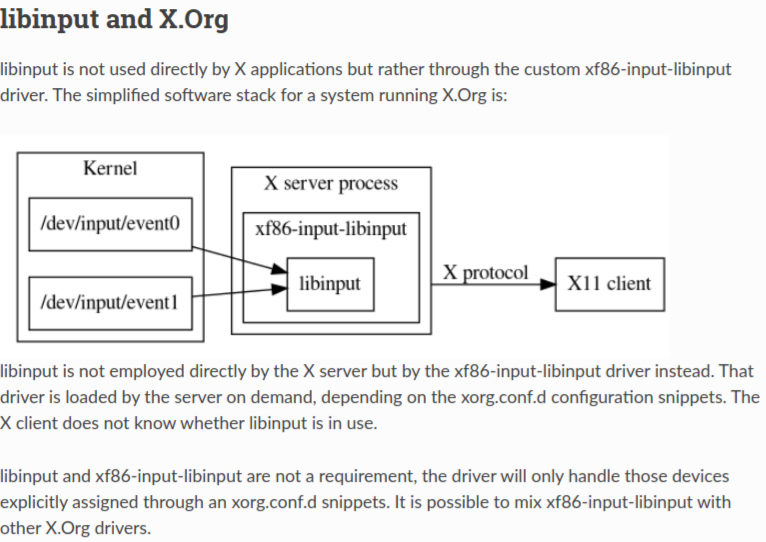

Previously, synaptics (easily mistaken for the GUI package manager Synaptic) was used to control devices such as trackpads and mice on Linux, but Ubuntu has been using libinput, which originated in Wayland, since around 15.04. In addition, synaptics is moving to maintenance mode and will no longer be actively developed.

You can use libinput under Xorg even if you are not on Wayland. On Ubuntu, a session running on Xorg instead of Wayland uses libinput rather than synaptics, through the xf86-input-libinput driver.

https://wayland.freedesktop.org/libinput/doc/latest/what-is-libinput.html#libinput-and-x-org

Also, Ubuntu 19.10, the latest release, added gesture support to GNOME (Wayland), but it is still inadequate.

Control the trackpad to handle gestures

What does it take to handle gestures?

- Get the trackpad input

- Determine the gesture from the input values

- Link the gesture to GUI operations such as workspace and browser navigation

Let's look at each of these in turn.

Read device input

Install libinput-tools to read trackpad input. https://wayland.freedesktop.org/libinput/doc/latest/tools.html

$ sudo apt-get install libinput-tools

libinput debug-events spits out device input information to standard output.

-event2 DEVICE_ADDED Sleep Button seat0 default group5 cap:k

-event17 DEVICE_ADDED DLL075B:01 06CB:76AF Touchpad seat0 default group7 cap:pg size 101x57mm tap(dl off) left scroll-nat scroll-2fg-edge click-buttonareas-clickfinger dwt-on

-event4 DEVICE_ADDED AT Translated Set 2 keyboard seat0 default group13 cap:k

event17 POINTER_MOTION +1.17s 1.22/ 0.00

event17 POINTER_MOTION +1.18s 1.22/ 1.22

event17 POINTER_MOTION +1.18s 1.22/ 0.00

-event4 KEYBOARD_KEY +2.56s *** (-1) pressed

event4 KEYBOARD_KEY +2.67s *** (-1) released

From this you can get device connection information, pointer movement on the trackpad, and keyboard input (with the key codes masked).
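
Reading this output from a program could look something like the following minimal Ruby sketch. It assumes that libinput debug-events is on the PATH and that the current user is allowed to read the input devices. The method names each_event_line and watch_libinput are hypothetical names chosen for illustration; they are not part of libinput or of any library.

```ruby
# Sketch only: method names are hypothetical, not part of libinput or Fusuma.

# Yields each non-empty line of libinput debug-events output, with
# surrounding whitespace and the leading "-" that some lines carry removed.
# Accepts anything that responds to each_line (an IO, a String, ...).
def each_event_line(io)
  return enum_for(:each_event_line, io) unless block_given?

  io.each_line do |raw|
    line = raw.strip.sub(/\A-/, '')
    yield line unless line.empty?
  end
end

# Spawns `libinput debug-events` and streams its lines to the block.
# Assumes the command is installed and the user may read input devices.
def watch_libinput(&block)
  IO.popen(%w[libinput debug-events]) do |io|
    each_event_line(io, &block)
  end
end
```

Calling watch_libinput { |line| puts line } would then print the events as they arrive. Keeping the line cleanup separate from the process spawning makes it possible to try the cleanup on a saved log without touching a real device.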

Also, if you swipe with three fingers, you will get the following results.

-event17 GESTURE_SWIPE_BEGIN +296.35s 3

event17 GESTURE_SWIPE_UPDATE +296.35s 3 6.14/-0.28 (24.06/-1.09 unaccelerated)

event17 GESTURE_SWIPE_UPDATE +296.36s 3 8.06/-0.81 (21.87/-2.19 unaccelerated)

event17 GESTURE_SWIPE_UPDATE +296.36s 3 7.71/-0.81 (20.78/-2.19 unaccelerated)

event17 GESTURE_SWIPE_UPDATE +296.37s 3 6.09/-0.81 (16.40/-2.19 unaccelerated)

event17 GESTURE_SWIPE_UPDATE +296.38s 3 4.06/-0.41 (10.94/-1.09 unaccelerated)

event17 GESTURE_SWIPE_UPDATE +296.38s 3 6.49/-0.81 (17.50/-2.19 unaccelerated)

event17 GESTURE_SWIPE_UPDATE +296.39s 3 4.06/-0.41 (10.94/-1.09 unaccelerated)

event17 GESTURE_SWIPE_UPDATE +296.40s 3 2.84/ 0.00 ( 7.66/ 0.00 unaccelerated)

event17 GESTURE_SWIPE_END +296.41s 3

A single line such as GESTURE_SWIPE_UPDATE +296.40s 3 2.84/ 0.00 contains the gesture type, the number of fingers, and the distance traveled.
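
To make those fields concrete, here is a small Ruby sketch that pulls them out of one line with a regular expression. The SwipeUpdate struct, the SWIPE_UPDATE pattern, and parse_swipe_update are hypothetical names of my own, and the pattern is written only against the sample output shown above, so other libinput versions may format lines differently.

```ruby
# Sketch only: SwipeUpdate and parse_swipe_update are hypothetical names,
# and the pattern is based solely on the sample output in this article.
SwipeUpdate = Struct.new(:device, :time, :fingers, :dx, :dy)

# Matches e.g. "event17 GESTURE_SWIPE_UPDATE +296.40s 3 2.84/ 0.00 (...)".
SWIPE_UPDATE = %r{
  (?<device>event\d+)\s+GESTURE_SWIPE_UPDATE\s+
  \+(?<time>\d+\.\d+)s\s+
  (?<fingers>\d+)\s+
  (?<dx>-?\d+\.\d+)/\s*(?<dy>-?\d+\.\d+)
}x

# Returns a SwipeUpdate for a swipe update line, or nil for any other line.
def parse_swipe_update(line)
  m = SWIPE_UPDATE.match(line)
  return nil unless m

  SwipeUpdate.new(m[:device], m[:time].to_f, m[:fingers].to_i,
                  m[:dx].to_f, m[:dy].to_f)
end
```

Only the accelerated dx/dy pair is captured here; the unaccelerated values in parentheses are ignored.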

Using libinput debug-events, you can easily get device events as well as swipe, pinch, and other gesture events. (libinput also has a C API, but I am not using it this time.)

By contrast, with synaptics on Ubuntu 14.10 or earlier you could display the trackpad log with the "synclient -m" command, but it did not output anything like swipe events, and the -m option has been removed from recent versions of synclient, so events can no longer be obtained that way.

Extract gestures from libinput debug-events

GESTURE_SWIPE_UPDATE +296.40s 3 2.84/ 0.00

If each such line is treated as one event, averaging the movement distances over several events tells you which direction the fingers are moving. It is enough to average the distances reported before GESTURE_SWIPE_END arrives.

For the sample above, the average movement works out to x = 5.68125, y = -0.5425, which means the fingers are moving to the right.

So we can tell that this was a three-finger swipe to the right.
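
The averaging can be sketched in Ruby as follows, using the eight dx/dy pairs from the sample output. The method names are hypothetical, and the rule for choosing a direction (take the axis with the larger average, with positive x as right and positive y as down) is a simplifying assumption of mine; it also applies no minimum-distance threshold.

```ruby
# Sketch only: method names and the direction rule are assumptions.

# Averages a list of [dx, dy] pairs taken from GESTURE_SWIPE_UPDATE lines.
def average_movement(deltas)
  return [0.0, 0.0] if deltas.empty?

  sum_x = deltas.sum { |dx, _| dx }
  sum_y = deltas.sum { |_, dy| dy }
  [sum_x / deltas.size, sum_y / deltas.size]
end

# Picks the dominant axis; positive x is taken as right, positive y as down.
def swipe_direction(avg_x, avg_y)
  if avg_x.abs >= avg_y.abs
    avg_x.positive? ? :right : :left
  else
    avg_y.positive? ? :down : :up
  end
end

# The dx/dy pairs from the three-finger swipe shown earlier.
deltas = [[6.14, -0.28], [8.06, -0.81], [7.71, -0.81], [6.09, -0.81],
          [4.06, -0.41], [6.49, -0.81], [4.06, -0.41], [2.84, 0.00]]
avg_x, avg_y = average_movement(deltas)
puts swipe_direction(avg_x, avg_y) # prints "right"
```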

Assign gestures to GUI operations

Once you know a swipe happened, how do you turn the gesture into something that manipulates the GUI? When I swipe, I want the screen to move right away. The easiest approach is to trigger an existing shortcut key.

xdotool is convenient for triggering shortcut keys. It can emulate keyboard input and mouse operations. http://manpages.ubuntu.com/manpages/trusty/man1/xdotool.1.html

Desktops usually have shortcut keys for switching workspaces, and these can be expressed as xdotool commands:

- Move to previous workspace

$ xdotool key ctrl+alt+Up

- Move to next workspace

$ xdotool key ctrl+alt+Down

Browser operations work the same way.

- Browser back

$ xdotool key alt+Left

- Browser forward

$ xdotool key alt+Right

In this way, commands can emulate workspace and browser operations. (Ideally you would get more intuitive feedback by moving the workspace pixel by pixel in sync with the distance of the gesture, but that depends on the implementation in the WM and the application, so emulating shortcut keys is enough for now.)
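
From Ruby, these shortcuts could be triggered with something like the sketch below. The SHORTCUTS table and the two method names are hypothetical, and the key combinations are simply the ones listed in this section, which differ between desktops. Running send_shortcut assumes that xdotool is installed and that an X session is available.

```ruby
# Sketch only: the table and method names are hypothetical, and the key
# combinations are the shortcuts from this article, which vary by desktop.
SHORTCUTS = {
  previous_workspace: 'ctrl+alt+Up',
  next_workspace: 'ctrl+alt+Down',
  browser_back: 'alt+Left',
  browser_forward: 'alt+Right'
}.freeze

# Builds the argument list for xdotool without running anything.
def xdotool_command(action)
  keys = SHORTCUTS.fetch(action) do
    raise ArgumentError, "unknown action: #{action}"
  end
  ['xdotool', 'key', keys]
end

# Runs xdotool for the given action; returns true when it exits successfully.
# Passing the arguments separately avoids going through a shell.
def send_shortcut(action)
  system(*xdotool_command(action))
end
```

For example, send_shortcut(:browser_back) runs xdotool key alt+Left.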

Write event-driven programs

Basically, the program just repeats the following in a loop: read the device input, determine the gesture, and call a command (xdotool).

I made Fusuma (https://github.com/iberianpig/fusuma), a tool written in Ruby that recognizes gestures from libinput. I had previously written a Perl script for synaptics (https://github.com/iberianpig/xSwipe), so counting that, this is my second such tool.

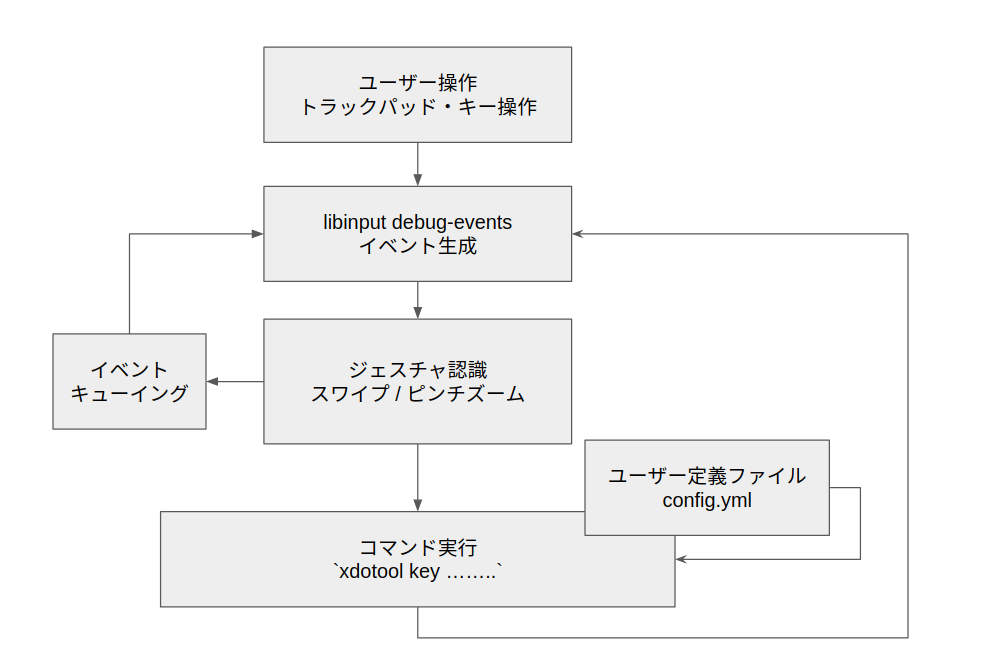

Put as a diagram, the flow looks roughly like this.

It works along the lines of event-driven programming, looping over the following steps in response to user operations.

1. Process the events emitted by libinput debug-events in a loop

2. The event dispatchers for swipe, pinch, and so on wait for events

3. Queue events until they can be dispatched, then return to 1.

4. The event handler looks up the command for the gesture in the configuration file

5. Execute that command and return to 1.
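
The steps above can be sketched in Ruby roughly as follows. This is a heavily simplified illustration, not Fusuma's actual code: it handles only swipes, uses a plain Hash in place of a configuration file, and every name in it (SAMPLE_CONFIG, run_loop, the runner parameter) is hypothetical. Which swipe triggers which shortcut in SAMPLE_CONFIG is also just an example mapping.

```ruby
# Sketch only: a simplified loop in the spirit of the steps above, not
# Fusuma's real implementation. All names here are hypothetical.

# Example mapping of [finger count, direction] to a command.
SAMPLE_CONFIG = {
  [3, :left] => %w[xdotool key alt+Left],
  [3, :right] => %w[xdotool key alt+Right]
}.freeze

UPDATE = %r{GESTURE_SWIPE_UPDATE\s+\S+\s+(\d+)\s+(-?[\d.]+)/\s*(-?[\d.]+)}

# Dominant axis decides; positive x is taken as right, positive y as down.
def direction(avg_x, avg_y)
  if avg_x.abs >= avg_y.abs
    avg_x.positive? ? :right : :left
  else
    avg_y.positive? ? :down : :up
  end
end

# Reads lines from io, queues swipe updates, and on GESTURE_SWIPE_END looks
# up and runs the command for the averaged movement. The runner defaults to
# executing the command, but can be swapped out for testing.
def run_loop(io, config, runner: ->(cmd) { system(*cmd) })
  queue = []
  io.each_line do |line|
    if (m = UPDATE.match(line))
      queue << [m[1].to_i, m[2].to_f, m[3].to_f]
    elsif line.include?('GESTURE_SWIPE_END') && !queue.empty?
      fingers = queue.first[0]
      avg_x = queue.sum { |_, x, _| x } / queue.size
      avg_y = queue.sum { |_, _, y| y } / queue.size
      command = config[[fingers, direction(avg_x, avg_y)]]
      runner.call(command) if command
      queue.clear
    end
  end
end
```

With a real device, run_loop could be fed the IO returned by IO.popen(%w[libinput debug-events]). Passing the runner as a parameter is a design choice that lets the loop be exercised against a saved log without sending any key presses.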

Fusuma running demo

It is hard to convey in a screen capture, so please install it and give it a try.

If you can write Ruby, the dispatcher and event handler parts can be extended with gems, so you can also pick up tap, stylus, and keyboard events and should be able to do all sorts of things. I wrote a dispatcher that recognizes "key press + swipe" events.

I can't give up gestures

Gestures have become indispensable to my everyday work: I rely on them so much for switching workspaces and going back and forward in the browser that it is a real nuisance when Fusuma is turned off.

Going forward, on Wayland you can receive events in JavaScript by writing a GNOME Shell extension, so the barrier to entry seems relatively low.

I realized that if I can handle device input freely, I can build all sorts of useful features. Once you can pick up events, you can link them to various actions as I did here, so I feel I could also build things like hand gestures or face gestures with a webcam. How about a notification that tells you your posture is bad? Wouldn't you want that?

I would like to keep finding small, everyday problems like this and solving them one by one.
