I implemented Coursera's logistic regression in Python

I implemented the logistic regression exercise from Week 3 of Dr. Andrew Ng's Machine Learning course on Coursera, using only NumPy as far as possible.

The usual magic command and imports.

```python
%matplotlib inline

import numpy as np
import matplotlib.pyplot as plt
```

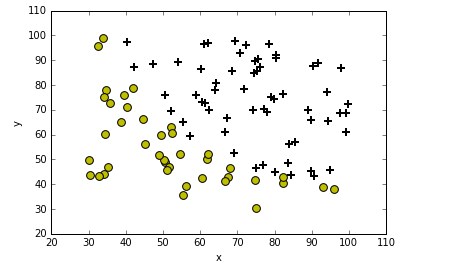

Read the data and plot it.

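```python
def plotData(data):
    # Boolean masks for the two classes (third column is the label)
    neg = data[:, 2] == 0
    pos = data[:, 2] == 1

    # Positive examples as black '+', negative examples as yellow dots.
    # The label= arguments give plt.legend() something to display.
    plt.scatter(data[pos][:, 0], data[pos][:, 1], marker='+', c='k', s=60, linewidth=2, label='y = 1')
    plt.scatter(data[neg][:, 0], data[neg][:, 1], c='y', s=60, label='y = 0')

    plt.xlabel('x')
    plt.ylabel('y')
    plt.legend(frameon=True, fancybox=True)
    plt.show()

# Each row: feature 1, feature 2, label (0 or 1)
data = np.loadtxt('ex2data1.txt', delimiter=',')
plotData(data)
```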
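plotData relies on boolean masks: `data[:, 2] == 1` is a True/False array with one entry per row, and `data[pos]` keeps only the rows where it is True. The small sketch below uses a made-up three-row array called `toy` (hypothetical values, not taken from ex2data1.txt) so that the real `data` is not overwritten:

```python
import numpy as np

# Hypothetical rows in the layout plotData expects: feature 1, feature 2, label
toy = np.array([[1.0, 2.0, 0],
                [3.0, 4.0, 1],
                [5.0, 6.0, 1]])

pos = toy[:, 2] == 1  # array([False,  True,  True])
neg = toy[:, 2] == 0  # array([ True, False, False])

# Boolean indexing keeps only the rows where the mask is True
print(toy[pos][:, 0])  # first feature of the positive examples
print(toy[neg][:, 0])  # first feature of the negative examples
```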

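Next, implement the sigmoid function and the cost function.

Sigmoid function:

$$g(z) = \frac{1}{1 + e^{-z}}$$

Cost function, with $h_\theta(x) = g(\theta^T x)$:

$$J(\theta) = -\frac{1}{m}\sum_{i=1}^{m}\left[y^{(i)}\log h_\theta(x^{(i)}) + \left(1 - y^{(i)}\right)\log\left(1 - h_\theta(x^{(i)})\right)\right]$$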

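```python
def sigmoid(z):
    # Logistic function g(z) = 1 / (1 + e^(-z)), applied element-wise
    return 1 / (1 + np.exp(-z))

def CostFunction(theta, X, y):
    m = len(y)
    # Hypothesis h = g(X theta) for every training example
    h = sigmoid(X.dot(theta))
    # Cross-entropy cost J(theta); j is a 1-element array
    # (shape (1,) or (1, 1) depending on the shape of theta)
    j = -1 * (1 / m) * (np.log(h).T.dot(y) + np.log(1 - h).T.dot(1 - y))
    return j
```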
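After many iterations, h can round to exactly 0 or 1, and `np.log` then returns `-inf`, which turns the cost into `inf` or `nan`. One common workaround, sketched here as an alternative that is not part of the original exercise, computes the cost directly from z = Xθ with `np.logaddexp`, using -log g(z) = log(1 + e^(-z)) and -log(1 - g(z)) = log(1 + e^z). The name `cost_stable` and the extreme example values are hypothetical:

```python
import numpy as np

def cost_stable(theta, X, y):
    """Cross-entropy cost computed from z = X theta without taking log(sigmoid(z))."""
    m = len(y)
    z = X.dot(theta)
    # -log(g(z)) = log(1 + exp(-z)) and -log(1 - g(z)) = log(1 + exp(z))
    loss = y * np.logaddexp(0, -z) + (1 - y) * np.logaddexp(0, z)
    return float(np.sum(loss) / m)

# Hypothetical extreme inputs: z = +500 and -500, where sigmoid() rounds to exactly 1.0 and 0.0
X_ext = np.array([[1.0, 500.0], [1.0, -500.0]])
y_ext = np.array([[1.0], [0.0]])
theta_ext = np.array([[0.0], [1.0]])
print(cost_stable(theta_ext, X_ext, y_ext))
```

For moderate values of z this gives the same number as the log-based formula; it only differs where the direct version would overflow to `nan`.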

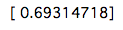
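A handy sanity check before looking at the real value: with θ = 0, every prediction is h = g(0) = 0.5, so each term of the sum is -log 0.5 and J(0) = ln 2 ≈ 0.693 whatever the data. The sketch below repeats the two functions from above and evaluates them on randomly generated data (hypothetical, not ex2data1.txt):

```python
import numpy as np

# Same definitions as above
def sigmoid(z):
    return 1 / (1 + np.exp(-z))

def CostFunction(theta, X, y):
    m = len(y)
    h = sigmoid(X.dot(theta))
    return -1 * (1 / m) * (np.log(h).T.dot(y) + np.log(1 - h).T.dot(1 - y))

# Hypothetical data: 50 examples, intercept column plus 2 random features, random 0/1 labels
rng = np.random.default_rng(0)
X_demo = np.c_[np.ones((50, 1)), rng.normal(size=(50, 2))]
y_demo = np.c_[rng.integers(0, 2, size=50)]

j0 = CostFunction(np.zeros(X_demo.shape[1]), X_demo, y_demo)
print(j0, np.log(2))  # the two values should agree
```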

Let's compute the cost at the initial parameters (θ = 0).

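```python
# Design matrix: a column of ones (intercept) followed by the two features
X = np.c_[np.ones((data.shape[0], 1)), data[:, 0:2]]
# Labels as an (m, 1) column vector
y = np.c_[data[:, 2]]

initial_theta = np.zeros(X.shape[1])
cost = CostFunction(initial_theta, X, y)
print(cost)
```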

This prints the initial cost. Next comes the implementation of gradient descent (the steepest descent method).

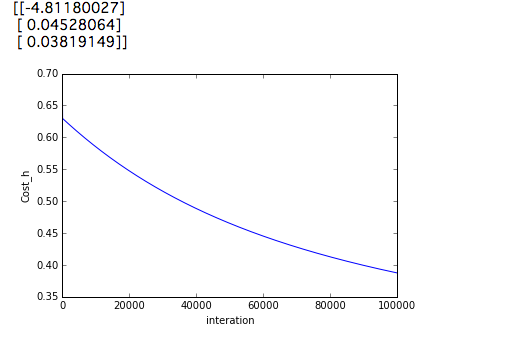
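Gradient descent repeatedly applies θ := θ - α(1/m)Xᵀ(h - y), where (1/m)Xᵀ(h - y) is the gradient of J(θ). Before running it for many iterations, one common way to double-check that gradient formula is to compare it with central finite differences of the cost. This is a sketch on random hypothetical data; the helper names `cost` and `grad` are illustrative, not from the original code:

```python
import numpy as np

def sigmoid(z):
    return 1 / (1 + np.exp(-z))

def cost(theta, X, y):
    # Same cross-entropy cost as CostFunction, returned as a float
    m = len(y)
    h = sigmoid(X.dot(theta))
    return (-(1 / m) * (np.log(h).T.dot(y) + np.log(1 - h).T.dot(1 - y))).item()

def grad(theta, X, y):
    # Analytic gradient used in the update step: (1/m) X^T (h - y)
    m = len(y)
    return (1 / m) * X.T.dot(sigmoid(X.dot(theta)) - y)

# Hypothetical data: 20 examples, intercept column plus 2 random features
rng = np.random.default_rng(1)
X_chk = np.c_[np.ones((20, 1)), rng.normal(size=(20, 2))]
y_chk = np.c_[rng.integers(0, 2, size=20)].astype(float)
theta_chk = rng.normal(size=(3, 1))

# Central differences: (J(theta + eps*e_k) - J(theta - eps*e_k)) / (2*eps)
eps = 1e-6
numeric = np.zeros_like(theta_chk)
for k in range(theta_chk.shape[0]):
    step = np.zeros_like(theta_chk)
    step[k, 0] = eps
    numeric[k, 0] = (cost(theta_chk + step, X_chk, y_chk)
                     - cost(theta_chk - step, X_chk, y_chk)) / (2 * eps)

max_diff = np.max(np.abs(numeric - grad(theta_chk, X_chk, y_chk)))
print(max_diff)  # should be tiny if the analytic gradient is right
```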

```python
def gradient_descent(theta, X, y, alpha=0.001, num_iters=100000):
    m = len(y)
    history = np.zeros(num_iters)
    for i in np.arange(num_iters):
        h = sigmoid(X.dot(theta))
        # Batch update: theta := theta - alpha * (1/m) * X^T (h - y)
        theta = theta - alpha * (1 / m) * (X.T.dot(h - y))
        # Record the cost after each update; .item() turns the
        # 1-element array returned by CostFunction into a float
        history[i] = CostFunction(theta, X, y).item()
    return theta, history

# theta must be a (3, 1) column so that h and y are both (m, 1)
initial_theta = np.zeros(X.shape[1])
theta = initial_theta.reshape(-1, 1)
cost = CostFunction(initial_theta, X, y)
theta, Cost_h = gradient_descent(theta, X, y)
print(theta)

plt.plot(Cost_h)
plt.ylabel('Cost_h')
plt.xlabel('iteration')
plt.show()
```

The result of executing it is as follows.

For some reason, this gives a better result than setting num_iters to 10000000. Let's check the accuracy.

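```python
def predict(theta, X, threshold=0.5):
    # Predict 1 when the estimated probability is at least the threshold
    p = sigmoid(X.dot(theta)) >= threshold
    return p.astype('int')

p = predict(theta, X)
y = y.astype('int')

# Count the examples whose prediction matches the label
accuracy_cnt = 0
for i in range(len(y)):
    if p[i, 0] == y[i, 0]:
        accuracy_cnt += 1

print(accuracy_cnt / len(y) * 100)
```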
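The counting loop can also be written as one vectorized comparison: `p == y` gives a boolean (m, 1) array, and its mean is the fraction of correct predictions. Also, because g(z) ≥ 0.5 exactly when z ≥ 0, the default threshold of 0.5 amounts to checking the sign of Xθ. A sketch with hypothetical arrays:

```python
import numpy as np

def sigmoid(z):
    return 1 / (1 + np.exp(-z))

# Hypothetical predictions and labels, both (m, 1) integer columns
p_demo = np.array([[1], [0], [1], [1]])
y_demo = np.array([[1], [0], [0], [1]])

# Fraction of matching entries, as a percentage
acc_pct = np.mean(p_demo == y_demo) * 100
print(acc_pct)  # 75.0

# g(z) >= 0.5 agrees with z >= 0 for these sample values
z = np.array([-3.0, -0.1, 0.0, 0.1, 3.0])
print(np.array_equal(sigmoid(z) >= 0.5, z >= 0))  # True
```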

With the default num_iters of 100000, the accuracy is 91.0%. So what happens if you set num_iters to 1000000?

In that case, the accuracy is 89.0%. It is unclear why the accuracy is worse even though the cost is lower.
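One possible reason, offered as a general observation rather than a diagnosis of this particular run: gradient descent minimizes the cross-entropy cost, not the number of misclassified points, and the two need not move together. Making already-correct predictions more confident can lower the cost even if a point near the 0.5 boundary flips to the wrong side. The toy probabilities below are made up for illustration, and `cross_entropy` and `accuracy_pct` are hypothetical helper names:

```python
import numpy as np

def cross_entropy(h, y):
    # Average cost -(1/m) * sum[y log h + (1 - y) log(1 - h)]
    return -np.mean(y * np.log(h) + (1 - y) * np.log(1 - h))

def accuracy_pct(h, y, threshold=0.5):
    # Percentage of examples where the thresholded prediction equals the label
    return np.mean((h >= threshold).astype(int) == y) * 100

y_toy = np.array([1, 1, 0])
h_a = np.array([0.51, 0.51, 0.49])  # every point barely on the correct side
h_b = np.array([0.99, 0.99, 0.60])  # two confident hits, one miss

for name, h in [('A', h_a), ('B', h_b)]:
    print(name, round(cross_entropy(h, y_toy), 3), round(accuracy_pct(h, y_toy), 1))
```

Model B has the lower cost but also the lower accuracy, the same pattern as the longer run above.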

Reference

https://github.com/JWarmenhoven/Coursera-Machine-Learning
