A supplement to "Camera Calibration" in OpenCV-Python Tutorials

I rewrote my supplementary notes to the OpenCV-Python Tutorials "Camera Calibration" as a separate article.

** Meaning of camera matrix **

The camera matrix is [fx, 0, cx; 0, fy, cy; 0, 0, 1]. It is easy to overlook that the focal lengths fx and fy are expressed in pixels. The camera center (cx, cy) [pixel] does not always coincide with the center of the image (half the image size); it coincides only if the camera faces exactly straight ahead. In a stereo camera, the camera's forward axis is not always orthogonal to the axis connecting the two cameras. Therefore, the vanishing point that a subject approaches as it moves away from the camera differs from the image center. The camera center (cx, cy) gives the coordinates of that vanishing point.
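A small numeric sketch of the vanishing-point interpretation, using plain numpy and a hypothetical camera matrix (fx = fy = 800, cx = 330, cy = 236 are made-up values, not from a real calibration): points with a fixed sideways offset that move farther away along the optical-axis direction project closer and closer to (cx, cy).

```python
import numpy as np

# Hypothetical intrinsics, all in pixels
K = np.array([[800.0,   0.0, 330.0],
              [  0.0, 800.0, 236.0],
              [  0.0,   0.0,   1.0]])

def project(K, X):
    """Pinhole projection of camera-frame points X (N x 3) to pixel coordinates (N x 2)."""
    uvw = X @ K.T                  # homogeneous image coordinates
    return uvw[:, :2] / uvw[:, 2:3]

# Same sideways offset (X, Y) = (0.5, -0.2), increasing depth Z
depths = np.array([1.0, 10.0, 100.0, 1e6])
X = np.column_stack([np.full_like(depths, 0.5), np.full_like(depths, -0.2), depths])
uv = project(K, X)
print(uv)  # rows move toward (cx, cy) = (330, 236) as Z grows
```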

When a stereo camera is calibrated, a difference between the camera centers of the left and right cameras indicates that the two cameras are pointing in slightly non-parallel directions.

It seems that (cx - width/2) / fx equals tan(θx), where θx is the horizontal angle by which the camera's direction deviates.
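The relation above can be evaluated directly. This is a minimal sketch; the helper name `principal_point_tilt_deg` and the calibration values (640x480 image, fx = fy = 800, cx = 330, cy = 236) are hypothetical, and the vertical angle simply applies the same formula to cy and fy.

```python
import math

def principal_point_tilt_deg(fx, fy, cx, cy, width, height):
    """Signed angles (degrees) from tan(theta_x) = (cx - width/2) / fx
    and, analogously, tan(theta_y) = (cy - height/2) / fy."""
    theta_x = math.degrees(math.atan((cx - width / 2.0) / fx))
    theta_y = math.degrees(math.atan((cy - height / 2.0) / fy))
    return theta_x, theta_y

# Hypothetical calibration result for a 640x480 image
tx, ty = principal_point_tilt_deg(fx=800.0, fy=800.0, cx=330.0, cy=236.0,
                                  width=640, height=480)
print(f"theta_x = {tx:.3f} deg, theta_y = {ty:.3f} deg")
```

A 10-pixel offset at fx = 800 corresponds to well under one degree, which is why such a deviation is hard to notice by eye.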

(I'm not an expert, so I apologize if I have made any mistakes.)

When doing stereo matching, after building a stereo camera module, check it by performing calibration and distortion correction on the stereo pair. This lets you visually check how well the distortion correction works. In areas where distortion correction fails, the parallax values shift systematically and are not correct.

Scripts in (opencv_3 directory)\sources\samples\python:

calibrate.py is a calibration + distortion correction script. It uses cv2.calibrateCamera(), cv2.getOptimalNewCameraMatrix(), and cv2.undistort().

calibrate.py in (opencv_2 directory)\sources\samples\python2 only performs calibration and does not correct distortion. It uses cv2.calibrateCamera().

Stereo Rectification example tom5760/stereo_vision

stereo_match.py performs stereo matching on images after stereo rectification (parallelization), computes the parallax (disparity), and converts it into distance. In it, the parallax is converted into 3D coordinates as follows.

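```python
# From stereo_match.py: imgL is the left image, disp the disparity map
h, w = imgL.shape[:2]
f = 0.8*w                          # rough guess for the focal length in pixels
Q = np.float32([[1, 0, 0, -0.5*w],
                [0,-1, 0,  0.5*h], # turn points 180 deg around x-axis,
                [0, 0, 0,     -f], # so that y-axis looks up
                [0, 0, 1,      0]])
points = cv2.reprojectImageTo3D(disp, Q)
```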

The resulting spatial coordinates are easily written to a file with the .ply extension. Since the script itself notes that the "Resulting .ply file can be easily viewed using MeshLab ( http://meshlab.sourceforge.net/ )", the points can be displayed in 3D with MeshLab as follows.

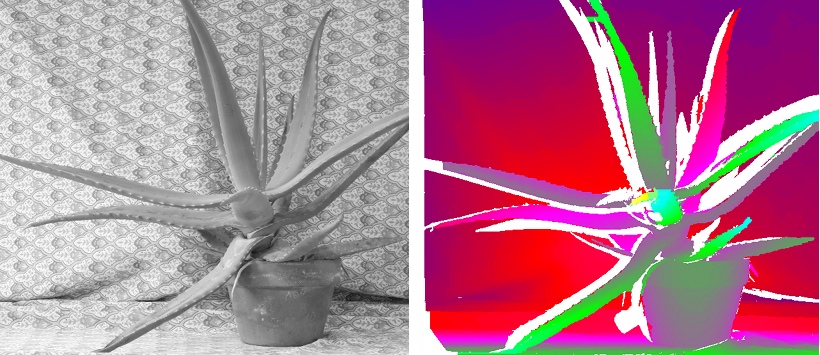

The figure shows the 3D coordinates generated by stereo_match.py, displayed in MeshLab.

Until I saw it in 3D like this, it was hard to notice that the cloth in the background had this much variation in depth.


Displaying the data in 3D with MeshLab or a similar tool in this way leads to discoveries like this.

The conversion to the PLY file format for this is included in the Python script above. The code below is the part that outputs the 3D data (both the 3D coordinates and the color of each point). It is very easy to write because it uses numpy's savetxt().

```python
import numpy as np
import cv2

ply_header = '''ply
format ascii 1.0
element vertex %(vert_num)d
property float x
property float y
property float z
property uchar red
property uchar green
property uchar blue
end_header
'''

def write_ply(fn, verts, colors):
    # Flatten to one row per point: (x, y, z) and (r, g, b)
    verts = verts.reshape(-1, 3)
    colors = colors.reshape(-1, 3)
    verts = np.hstack([verts, colors])
    with open(fn, 'w') as f:
        # The header declares the vertex count; each following row is "x y z r g b"
        f.write(ply_header % dict(vert_num=len(verts)))
        np.savetxt(f, verts, '%f %f %f %d %d %d')
```
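A variant worth knowing: np.savetxt() can also emit the PLY header itself through its `header` and `comments` arguments. The sketch below additionally drops pixels that have no valid disparity before writing; the mask `disp > disp.min()` treats the minimum disparity value as "invalid" (I believe stereo_match.py uses a similar mask), and the helper name `write_ply_masked` and the tiny input arrays are hypothetical.

```python
import os
import tempfile
import numpy as np

def write_ply_masked(fn, points, colors, disp):
    """Keep only pixels with disparity above the minimum, then write an ASCII PLY."""
    mask = disp > disp.min()
    verts = np.hstack([points[mask].reshape(-1, 3), colors[mask].reshape(-1, 3)])
    header = "\n".join([
        "ply", "format ascii 1.0", f"element vertex {len(verts)}",
        "property float x", "property float y", "property float z",
        "property uchar red", "property uchar green", "property uchar blue",
        "end_header"])
    # comments="" stops savetxt from prefixing each header line with "# "
    np.savetxt(fn, verts, fmt="%f %f %f %d %d %d", header=header, comments="")
    return len(verts)

# Tiny usage example with made-up data: two of the four pixels are valid
rng = np.random.default_rng(0)
disp = np.array([[-1.0, 2.0], [3.0, -1.0]], np.float32)
points = rng.random((2, 2, 3)).astype(np.float32)
colors = np.full((2, 2, 3), 200, np.uint8)
fn = os.path.join(tempfile.mkdtemp(), "out.ply")
n = write_ply_masked(fn, points, colors, disp)
print(n)  # 2
```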

When computing the parallax, specify the minimum parallax value and the number of parallax values to search, so that the search covers only the range expected from the camera arrangement and the object distances. This

- makes wrong parallax values less likely, and
- reduces the computation time.

** Related sample programs in OpenCV C++ (stereo / camera calibration) **

It is also useful to read the stereo-related C++ sample programs from the "List of OpenCV 2.4.0 sample sources (samples/cpp)" (https://github.com/YusukeSuzuki/opencv_sample_list_jp/blob/master/samples_cpp.rst): 3calibration.cpp, calibration.cpp, calibration_artificial.cpp, stereo_calib.cpp, stereo_match.cpp.

Parallax values detected in this way tend to be affected by noise. In OpenCV 3.1, the influence of noise can be reduced with cv::ximgproc::DisparityWLSFilter (see its Class Reference; it is part of the ximgproc module in opencv_contrib). Note that OpenCV 3.1 has a different function interface from the OpenCV 2.4 series, but it is worth a try.

** For fisheye camera **

Calibration of fisheye camera with OpenCV

Use cv::fisheye::calibrate(), the calibration function for fisheye cameras, to obtain the camera parameters. The function is described on the following page: http://docs.opencv.org/modules/calib3d/doc/camera_calibration_and_3d_reconstruction.html#fisheye-calibrate

When I checked the [python documentation server], fisheye camera calibration was not included in cv2. In that case, give up on processing in Python and write the code in C++. (Newer OpenCV releases expose these functions in Python as cv2.fisheye.)

Only stereo rectification (parallelization) requires the functions in the cv::fisheye namespace. After rectification, all you have to do is compute the parallax image as before.

The following article contains code for the fisheye case: OpenCV camera calibration sample code

Omnidirectional Camera Calibration: with an omnidirectional camera, which has an even wider angle of view than a fisheye camera, stereo rectification becomes more difficult.

YouTube OpenCV GSoC 2015 Omnidirectional Camera Calibration

“Omnidirectional Cameras Calibration and Stereo 3D Reconstruction” – opencv_contrib/ccalib module (Baisheng Lai, Bo Li)

Davide Scaramuzza Omnidirectional Camera pdf

Fisheye lenses use various projection methods, which differ in the relationship between the image radius R and the incidence angle θ. Please refer to the following URL. You need to check which of these methods is supported by the source code in opencv_contrib.

- Equidistant projection
- Equisolid angle projection
- Orthographic projection

Difference in projection method

[Conventional fisheye lens (H180), newly studied fisheye lens (TY180)](http://www.mvision.co.jp/mcm320fish.html)
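The projection methods listed above differ only in how the image radius R depends on the incidence angle θ. The standard relations are R = f·θ (equidistant), R = 2f·sin(θ/2) (equisolid angle) and R = f·sin θ (orthographic); the ordinary perspective lens R = f·tan θ is included for comparison. The function name `image_radius` and f = 300 px are hypothetical.

```python
import numpy as np

def image_radius(theta, f, model):
    """Image radius R for a ray at angle theta (radians) from the optical axis."""
    if model == "perspective":    # ordinary lens: R = f tan(theta)
        return f * np.tan(theta)
    if model == "equidistant":    # R = f theta
        return f * theta
    if model == "equisolid":      # R = 2 f sin(theta / 2)
        return 2.0 * f * np.sin(theta / 2.0)
    if model == "orthographic":   # R = f sin(theta)
        return f * np.sin(theta)
    raise ValueError(f"unknown model: {model}")

f = 300.0
models = ("perspective", "equidistant", "equisolid", "orthographic")
for deg in (10, 45, 80):
    th = np.radians(deg)
    print(deg, {m: round(float(image_radius(th, f, m)), 1) for m in models})
```

Near the optical axis all four models give R ≈ f·θ; they diverge toward the edge of the field of view, where the perspective model's tan θ blows up at 90 degrees.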

[Design Wave MAGAZINE, September 2008 issue](http://books.rakuten.co.jp/rb/7848928/): the featured article "Research on fisheye lenses that have begun to be installed in cars: comparison of various projection methods and mutual conversion methods" is 15 pages long. It describes the methods in detail, including how to convert the appearance of an image from one method to another.

[Windows] [Python] Camera calibration of fisheye lens with OpenCV

** Which should I use, square grid or circle grid? **

In conclusion, it is better to use the circle grid. The reason is simple: the circle grid gives higher accuracy when calculating the feature-point coordinates in step 2. A circle (or any ellipse) still appears as an ellipse when viewed from an angle, so the feature-point coordinates can be obtained accurately by using its center of gravity as the feature point. With a square grid, on the other hand, the feature point is an intersection of straight lines; when viewed from an angle, the squares are distorted and the coordinates cannot be obtained stably.

[`cv2.findCirclesGridDefault(image, patternSize[, centers[, flags]])`](http://docs.opencv.org/2.4/modules/calib3d/doc/camera_calibration_and_3d_reconstruction.html#findcirclesgrid)

Garbage in, garbage out. Stereo matching is a measurement, and good results cannot be obtained unless the images are taken under conditions suitable for measurement:

- Match the brightness of the left and right cameras (turn auto gain off).
- Keep the image within a proper range of brightness values (avoid blown-out highlights and crushed blacks).
- Make sure the image has texture variation (avoid images that are too flat).

None of this is programming, but you cannot ignore it and still get good results. To repeat: stereo matching is a measurement. The quality of its input data depends on the conditions of the subject and the shooting conditions of the camera. No matter how good the program itself is, poor input data yields only poor results. That is "garbage in, garbage out".

** Understanding the stereo camera from the principle **

Understanding stereo cameras depends heavily on the principles of 3D measurement, which differs somewhat from other areas of image processing and image recognition. Even people who are good at ordinary image processing may find it hard to master the many arguments of a stereo matching function.
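The core principle for a rectified, parallel stereo pair is Z = f·B / d, with focal length f in pixels, baseline B, and disparity d in pixels. Differentiating gives ΔZ ≈ Z² / (f·B) · Δd, which is why depth error grows quickly with distance. The function names and the numbers in the example (f = 800 px, B = 0.1 m) are hypothetical.

```python
def depth_from_disparity(d_px, f_px, baseline_m):
    """Depth Z = f * B / d for a rectified, parallel stereo pair."""
    if d_px <= 0:
        raise ValueError("disparity must be positive")
    return f_px * baseline_m / d_px

def depth_resolution(z_m, f_px, baseline_m, dd_px=1.0):
    """Approximate depth change caused by a disparity error of dd_px pixels."""
    return z_m ** 2 / (f_px * baseline_m) * dd_px

z = depth_from_disparity(40.0, 800.0, 0.1)
print(z)                                # 2.0 (metres)
print(depth_resolution(z, 800.0, 0.1))  # about 0.05 m per pixel of disparity error
```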

- [Computer Vision] Epipolar Geometry Learned with Cats: easy to understand and recommended.
- How to calibrate camera with python + opencv → stereo matching → measure depth http://russeng.hatenablog.jp/entry/2015/07/02/080515
- Summary of multi-view restoration of 3D images and stereo vision http://qiita.com/sobeit@github/items/d419ea39b06e43e6200f

These two are also written in an easy-to-understand manner.

Stefano Mattoccia, "Stereo Vision: Algorithms and Applications" (DEIS, University of Bologna; 214 slides on SlideShare) is a fairly detailed slide deck. It is hard to find such a detailed explanation in an image recognition book.

Semi-Global Matching with Jetson TK1

High-precision calibration of fisheye lens camera with strip pattern http://www.iim.cs.tut.ac.jp/~kanatani/papers/fisheyecalib.pdf

** Countermeasures against parallax errors in the SAD algorithm when the left and right cameras differ in brightness **

It is well known that brightness differences between the left and right images worsen stereo matching results. One countermeasure is to modify the histograms: [Suppression for Luminance Difference of Stereo Image-Pair Based on Improved Histogram Equalization](http://onlinepresent.org/proceedings/vol6_2012/17.pdf)

Advantages of the CENSUS transform (http://www.slideshare.net/FukushimaNorishige/popcnt):

- Robust to lighting fluctuations: brightness values are not compared directly, only their relationship to the center pixel.
- Robust to noise: binary values are compared, so minute noise has little influence.
- Stable matching in areas with little pattern: comparing against the center acts like differentiation, which emphasizes edges (pattern).
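A minimal numpy sketch of a 3x3 census transform and the Hamming distance used to compare census codes (the "popcnt" in the referenced slides). The bit convention (a bit is 1 when the neighbor is darker than the center) is one common choice, and the helper names are hypothetical. Adding a constant to the image leaves every code unchanged, which illustrates the robustness to lighting described above.

```python
import numpy as np

def census3x3(img):
    """8-bit census code per pixel over a 3x3 window; border pixels are left as 0."""
    img = img.astype(np.int32)
    h, w = img.shape
    center = img[1:-1, 1:-1]
    code = np.zeros((h - 2, w - 2), np.uint8)
    bit = 0
    for dy in (-1, 0, 1):
        for dx in (-1, 0, 1):
            if dy == 0 and dx == 0:
                continue
            neighbor = img[1 + dy:h - 1 + dy, 1 + dx:w - 1 + dx]
            code |= (neighbor < center).astype(np.uint8) << bit
            bit += 1
    out = np.zeros((h, w), np.uint8)
    out[1:-1, 1:-1] = code
    return out

def hamming(a, b):
    """Per-pixel number of differing bits between two census images."""
    return np.unpackbits(np.bitwise_xor(a, b)[..., None], axis=-1).sum(axis=-1)

rng = np.random.default_rng(2)
img = rng.integers(0, 200, size=(32, 32), dtype=np.uint8)
brighter = img + np.uint8(20)   # uniform lighting change, no clipping
print(hamming(census3x3(img), census3x3(brighter)).sum())  # 0
```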

Postscript
