Extract only the cat part from the cat image (matting / semantic segmentation)

Overview

From the cat image collected from AIX, I tried to extract only the cat part with as little effort as possible.

Foreground extraction

The process of separating the foreground and background from the image is called matting. It seems to be a major function in Photoshop, and in gimp, the function named "Foreground Extraction" corresponds to it. It seems that the general behavior is to divide the image into three types, "foreground", "background", and "either", and determine the boundary by looking at the image features in each area.

It seems difficult to automate this process, and in the scope of my research, I could only find a method called Deep Automatic Portrait Matting, which extracts only people from portrait photographs.

Apart from this, there was a paper on a method of automatically generating information (trimap) used for matting.

However, automatic matting is not always possible as long as an appropriate trimap can be generated. In fact, when processing with gimp etc., there are many situations where the foreground / background judgment is not classified as expected in small details and requires fine adjustment.

Semantic segmentation

As another approach, I considered using semantic segmentation. Semantic segmentation discriminates various objects on a pixel-by-pixel basis for a single input image.

In the previously written article, I wrote that ChainerCV also supports semantic segmentation, but the one prepared as a pre-learning model is CamVid. It is based on training data called dataset, and supports 11 classes such as sky, road, car, and pedestrian. There is no information about the cat I want to handle this time.

When I searched for other semantic segmentation implementations and datasets, I found that DilatedNet Keras Implementation was PASCAL VOC2011 challange. I distributed it including a trained model using a dataset based on the dataset of .ac.uk/pascal/VOC/voc2012/), so I decided to use it this time.

setup

You can use it by cloning the code, installing all the python modules that satisfy the requirements.txt dependency, and downloading the pre-learning model. It uses old Keras and TensorFlow backend (0.12.1), so it's a good idea to have a dedicated virtualenv environment.

$ pip install -r requirements.txt

$ curl -L https://github.com/nicolov/segmentation_keras/releases/download/model/nicolov_segmentation_model.tar.gz \

| tar xvf -

$ python predict.py --weights_path \

conversion/converted/dilation8_pascal_voc.npy \

images/cat.jpg

dilation8_pascal_voc.npy will be the pre-training model file. Processing the included images / cat.jpg will generate a file called images / cat_seg.png.

Applies to unknown images

In fact, let's use this model to process an unknown image. Unfortunately, this implementation has a limited size of the input image, and it seems that it can only be processed if the width and height are less than about 500. I resized the image to a size that meets that limit in advance, and then processed it.

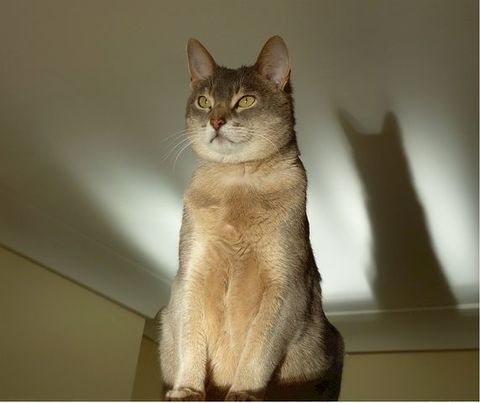

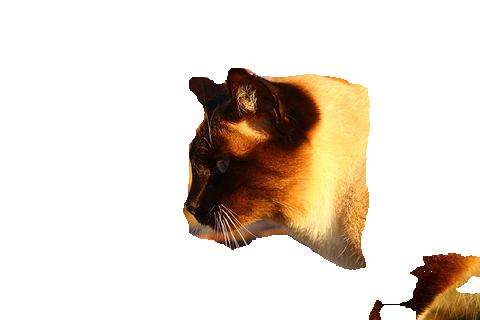

Image to be processed:

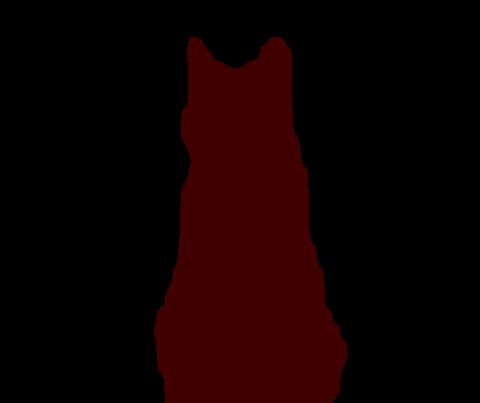

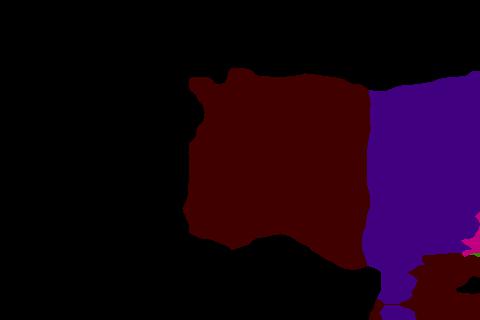

Segmentation output

The area identified as a cat image is identified by the color # 400000 (R: 0x40, G: 0x00, B: 0x00). At first glance it seems to be working. After that, while referring to each pixel of this image, it seems that the desired result can be obtained by filling the judged part other than the cat image from the original image.

However, if you look closely at the boundary part, it is not exactly divided exactly according to the numerical values.

For absolute value comparison

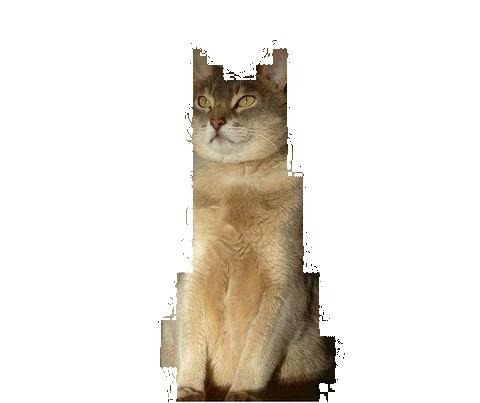

Since the values around the edges are slightly blurred in this way, if you simply compare the absolute values of the segmentation pixels to determine whether it is a foreground image, this part of the image will be missing.

See the norms between color spaces

As a result of various thoughts and trials, I used numpy's linalg.norm to calculate the distance between the color of the segmentation pixel and # 400000, and if it is within a certain number, it is determined that it is a cat image pixel. I did. The result of considering the range corresponding to 32, which is half of 64, as valid is as follows.

It feels good. The code to output this result is shown below.

# -*- coding: utf-8 -*-

import argparse

import os, sys

from PIL import Image

import numpy as np

def get_args():

p = argparse.ArgumentParser()

p.add_argument("--contents-dir", '-c', default=None)

p.add_argument("--segments-dir", '-s', default=None)

p.add_argument("--output-dir", '-o', default=None)

p.add_argument("--cat-label-vals", '-v', default="64,0,0")

args = p.parse_args()

if args.contents_dir is None or args.segments_dir is None or args.output_dir is None:

p.print_help()

sys.exit(1)

return args

def cat_col_array(val_str):

vals = val_str.split(',')

vals = [int(i) for i in vals]

return vals

def cmpary(a1, a2):

v1 = np.asarray(a1)

v2 = np.asarray(a2)

norm = np.linalg.norm(v1-v2)

if norm <= 32:

return True

return False

def make_images(cont_dir, seg_dir, out_dir, cat_vals):

files = []

for fname in os.listdir(cont_dir): # make target file list

seg_fname = os.path.join(seg_dir, fname)

if os.path.exists(seg_fname):

files.append(fname)

for fname in files:

print("processing: %s" % fname)

c_fname = os.path.join(cont_dir, fname)

cont = np.asarray(Image.open(c_fname)).copy()

s_fname = os.path.join(seg_dir, fname)

seg = np.asarray(Image.open(s_fname))

width, height, _ = seg.shape

for y in range(height):

for x in range(width):

vals = seg[x, y]

if not cmpary(vals, cat_vals):

cont[x, y] = [255, 255, 255]

out = Image.fromarray(cont)

o_fname = os.path.join(out_dir, fname)

out.save(o_fname)

def main():

args = get_args()

cat_vals = cat_col_array(args.cat_label_vals)

make_images(args.contents_dir, args.segments_dir, args.output_dir,

cat_vals)

if __name__ == '__main__':

main()

Task

When the model used this time was applied to all the images collected and selected from AIX, it did not always show 100% expected results. There are cases where it is judged that there are characteristics of any of the remaining 19 types other than cats.

Part of the torso is judged as a dog

When the following image was processed, a part of the body part was identified as a dog.

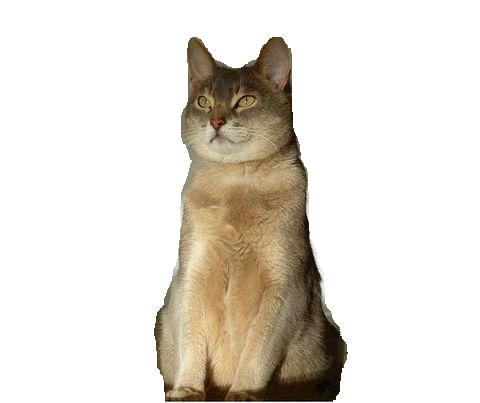

Violet-like color (# 400080) is the area identified as a dog. Certainly, I feel that dogs with this kind of color are relatively expensive. If you give this result to the above script, it will look like this:

Since the image prepared this time contains almost no dogs, it may be possible to leave the area judged to be a dog.

Treatment of black cats

Black cats are difficult to capture in photos in the first place, so it seems that problems are likely to occur in terms of discrimination.

The shadow part has become a part of the cat, and the body part that looks only black has disappeared.

data set

The URLs to the data targeted for segmentation / matting are listed on github this time.

- knok/pixabay-cat-images: Pixabay cat image URLs list

- pixabay-cat-images/classified-cat-jp-images.txt

1100 It will be a little image.

from now on

I think that pix2pix's edges2cats can be reproduced to some extent even with this level of data, so I will actually learn it.

Besides, I would like to read a paper to deepen my understanding of the structure of SegNet that can be used with DilatedNet and ChainerCV used this time. Also, I would like to try learning the training data used in the DilatedNet Keras implementation with ChainerCV's SegNet.

Recommended Posts