[Explanation for beginners] TensorFlow tutorial MNIST (for beginners)

TensorFlow beginner tutorial

I ran the TensorFlow beginners' tutorial, MNIST For ML Beginners. I'm a liberal arts graduate, this is **my first time using TensorFlow**, and for that matter **my first time with Deep Learning**. On top of that, **I have hardly done any machine learning**. For the mathematical background, please refer to the article "How to study mathematics for working adults to understand statistics and machine learning". This article explains things from a beginner's perspective. Many parts lack rigor, because I give priority to clarity.

Reference link

- Installing TensorFlow on Windows was easy even for Python beginners
- [Explanation for beginners] TensorFlow basic syntax and concept
- TensorFlow API memo
- [Introduction to TensorBoard] Visualize TensorFlow processing to deepen understanding
- Visualize TensorFlow tutorial MNIST (for beginners) with TensorBoard
- [Introduction to TensorBoard: image] TensorFlow Visualize image processing to deepen understanding
- [Introduction to TensorBoard: Projector] Make TensorFlow processing look cool
- [Explanation for beginners] TensorFlow Tutorial Deep MNIST
- Yuki Kashiwagi's facial features to understand TensorFlow [Part 1]

Overview of MNIST For ML Beginners

The outline diagram is as follows.

1. Learning from images and correct answer data

The MNIST database (Modified National Institute of Standards and Technology database) is a set of handwritten digit images together with correct answer data (labels) indicating which digit each image shows. We read this data and learn which digit should be returned for which kind of image.

2. Model generation

From the result of "1. Learning from images and correct answer data", create a model formula. Internally, the model looks like this image (0 is taken as an example).

3. Evaluation of learning results

Calculate the correct answer rate (accuracy) that the generated model achieves.

MNIST For ML Beginners execution procedure

Execution environment

The execution environment is as shown in the figure below. I'm running from a Jupyter Notebook.

1. Start Jupyter Notebook

Anaconda Navigator is launched from the Windows menu.

Then launch Jupyter Notebook from Anaconda Navigator.

Rename the file in Jupyter Notebook.

2. Tutorial execution

All you have to do is run the tutorial.

Press Shift + Enter to execute a command (the Enter key alone just inserts a line break).

The last "0.9178" is the evaluation of the learning result, which means that the correct answer rate is about 92%.

3. Computational Graph

When you output the computational graph (the graph of the calculation formulas) with TensorBoard, it looks like this. For details, refer to the article "Visualize TensorFlow tutorial MNIST (for beginners) with TensorBoard".

Explanation of each command

0. Overview

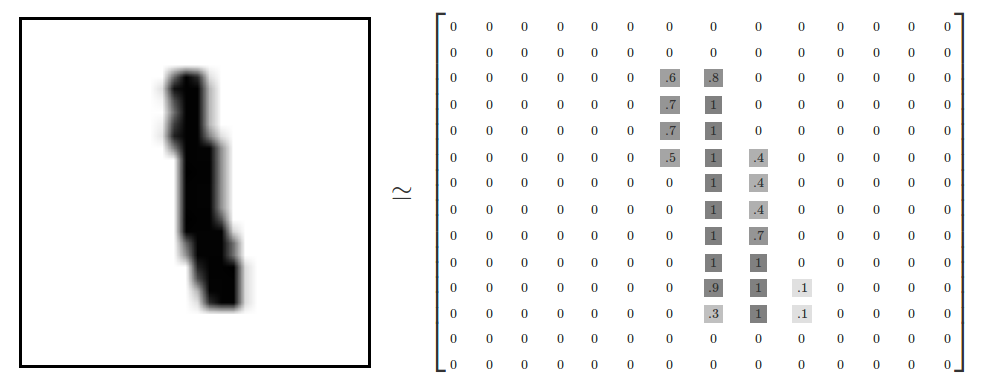

MNIST represents each handwritten digit from 0 to 9 as a 28 x 28 grid of dots (pixels), which is 784 values per image.

However, 28 x 28 data would be complicated to explain in this article, so we substitute a simpler example: two kinds of handwritten data, a vertical bar (|) and a horizontal bar (-), each drawn on a 5 x 5 grid of dots. It then looks like the figure below.
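The simplified bar data can also be written out as arrays. The following is a minimal NumPy sketch, not part of the tutorial; the names `vertical_bar`, `horizontal_bar`, and `images` are made up for illustration, and it assumes a filled dot is 1.0 and an empty dot is 0.0.

```python
import numpy as np

# Hypothetical 5 x 5 images: 1.0 marks a filled dot, 0.0 an empty dot.
vertical_bar = np.zeros((5, 5), dtype=np.float32)
vertical_bar[:, 2] = 1.0      # fill the middle column: "|"

horizontal_bar = np.zeros((5, 5), dtype=np.float32)
horizontal_bar[2, :] = 1.0    # fill the middle row: "-"

# Flatten each image into one row of 25 values, in the same way that
# MNIST flattens 28 x 28 images into rows of 784 values.
images = np.stack([vertical_bar.reshape(-1), horizontal_bar.reshape(-1)])
print(images.shape)  # (2, 25)
```

Each row of `images` plays the role of one row of x in the model that follows.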

1. MNIST data import

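Import the MNIST data. If the data already exists locally in "MNIST_data/", it is not downloaded again. Setting "one_hot=True" makes each label a one-hot vector: ten values that are all 0 except for a 1 at the position of the correct digit.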

from tensorflow.examples.tutorials.mnist import input_data

mnist = input_data.read_data_sets("MNIST_data/", one_hot=True)
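To make the one-hot label format concrete, here is a small NumPy sketch. It is not how `read_data_sets` is implemented; the helper `one_hot` and the sample labels are hypothetical and only show what the resulting rows look like.

```python
import numpy as np

def one_hot(labels, num_classes=10):
    """Turn integer labels into rows with a single 1.0 at the label's index."""
    encoded = np.zeros((len(labels), num_classes), dtype=np.float32)
    encoded[np.arange(len(labels)), labels] = 1.0
    return encoded

labels = np.array([3, 0, 9])   # made-up digit labels for illustration
encoded = one_hot(labels)
print(encoded[0])              # the row for digit 3 has its 1.0 at index 3
```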

2. Load TensorFlow library

import tensorflow as tf

3. Model definition

Define the input, two variables, and a regression model.
- x: placeholder that receives the handwritten digit images
- W: variable that holds the weights
- b: variable that holds the bias

Explained with the 5-dot square vertical and horizontal bar model, it looks like this (the bias b is an adjustment term and is not included in the figure). The model is defined so that each image is scored by the value of $xW + b$. "tf.nn.softmax" (the softmax function) then converts those scores into probabilities that sum to 1, one for each digit.

x = tf.placeholder(tf.float32, [None, 784])

W = tf.Variable(tf.zeros([784, 10]))

b = tf.Variable(tf.zeros([10]))

y = tf.nn.softmax(tf.matmul(x, W) + b)
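What the model line computes can be reproduced outside TensorFlow. The sketch below is a NumPy approximation written for this explanation, not the TensorFlow implementation; the function `softmax` and the random stand-in images are assumptions.

```python
import numpy as np

def softmax(scores):
    """Row-wise softmax: exponentiate, then normalize each row to sum to 1."""
    shifted = scores - scores.max(axis=1, keepdims=True)  # numerical stability
    exp = np.exp(shifted)
    return exp / exp.sum(axis=1, keepdims=True)

x = np.random.rand(4, 784).astype(np.float32)   # 4 random stand-in "images"
W = np.zeros((784, 10), dtype=np.float32)       # weights start at zero
b = np.zeros(10, dtype=np.float32)              # bias starts at zero

y = softmax(x @ W + b)
print(y.shape)   # (4, 10)
print(y[0])      # every entry is 0.1 while W and b are still all zeros
```

Before any training, all ten digits are considered equally likely, which is why training is needed to move W and b away from zero.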

4. Definition of correct variable

Here, define the placeholder that stores the correct answer data (which digit each image actually shows). Each label is a row of 10 values with a 1 at the position of the correct digit.

y_ = tf.placeholder(tf.float32, [None, 10])

5. Definition of cross entropy

Define the cross entropy. Cross entropy measures how far the predicted probabilities are from the correct answers; the smaller it is, the better the prediction.

cross_entropy = tf.reduce_mean(-tf.reduce_sum(y_ * tf.log(y), reduction_indices=[1]))
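The same formula can be tried on a few hand-made numbers. This is a NumPy sketch under the assumption of two classes and two examples; the arrays `confident` and `unsure` are invented to show that better predictions give a smaller value.

```python
import numpy as np

def cross_entropy(y_true, y_pred):
    """Mean over examples of -sum(y_true * log(y_pred)), as in the line above."""
    per_example = -np.sum(y_true * np.log(y_pred), axis=1)
    return per_example.mean()

y_true = np.array([[0.0, 1.0], [1.0, 0.0]])      # one-hot correct answers
confident = np.array([[0.1, 0.9], [0.8, 0.2]])   # mostly right predictions
unsure = np.array([[0.5, 0.5], [0.5, 0.5]])      # no idea either way

loss_confident = cross_entropy(y_true, confident)
loss_unsure = cross_entropy(y_true, unsure)
print(loss_confident < loss_unsure)  # True
```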

6. Definition of training method

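Train with "tf.train.GradientDescentOptimizer", which adjusts W and b by gradient descent so that the cross entropy becomes smaller. The argument 0.5 is the learning rate.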

train_step = tf.train.GradientDescentOptimizer(0.5).minimize(cross_entropy)
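The idea of gradient descent is easier to see on a one-variable function. The sketch below is plain Python and unrelated to the TensorFlow internals; the function `f`, its gradient `grad_f`, and the learning rate 0.1 are made up for illustration (the tutorial itself uses 0.5 on the cross entropy).

```python
def f(w):
    """A made-up function to minimize; its minimum is at w = 3."""
    return (w - 3.0) ** 2

def grad_f(w):
    """Derivative of f with respect to w."""
    return 2.0 * (w - 3.0)

learning_rate = 0.1
w = 0.0
for step in range(50):
    # Move w a small step in the direction that makes f(w) smaller.
    w = w - learning_rate * grad_f(w)

print(w, f(w))  # w ends up close to 3, f(w) close to 0
```

The optimizer does the same kind of update for every entry of W and b at once.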

7. Session generation and variable initialization

sess = tf.InteractiveSession()

tf.global_variables_initializer().run()

8. Training execution

Repeat the training step 1000 times, each time using a batch of 100 randomly chosen training examples.

for _ in range(1000):
    batch_xs, batch_ys = mnist.train.next_batch(100)
    sess.run(train_step, feed_dict={x: batch_xs, y_: batch_ys})
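The whole loop can be imitated on the 5-dot bar data without TensorFlow. This is a rough NumPy sketch under several assumptions: the noisy bar data from `make_data` is invented, batches are drawn by simple random sampling rather than by `next_batch`, and the gradient of softmax plus cross entropy is written out by hand.

```python
import numpy as np

rng = np.random.default_rng(0)

def softmax(scores):
    shifted = scores - scores.max(axis=1, keepdims=True)
    exp = np.exp(shifted)
    return exp / exp.sum(axis=1, keepdims=True)

def make_data(n):
    """Hypothetical data: noisy vertical bars (class 0), horizontal bars (class 1)."""
    labels = rng.integers(0, 2, size=n)
    images = np.zeros((n, 5, 5))
    images[labels == 0, :, 2] = 1.0                    # middle column
    images[labels == 1, 2, :] = 1.0                    # middle row
    images += rng.normal(0.0, 0.1, size=images.shape)  # a little noise
    return images.reshape(n, 25), np.eye(2)[labels]    # flat images, one-hot labels

train_x, train_y = make_data(1000)
W = np.zeros((25, 2))
b = np.zeros(2)
learning_rate = 0.5

for _ in range(100):
    # Pick 100 random training examples for this step.
    idx = rng.choice(len(train_x), size=100, replace=False)
    batch_xs, batch_ys = train_x[idx], train_y[idx]
    y = softmax(batch_xs @ W + b)
    # Gradient of the mean cross entropy with respect to the scores xW + b.
    grad_scores = (y - batch_ys) / len(batch_xs)
    W -= learning_rate * (batch_xs.T @ grad_scores)
    b -= learning_rate * grad_scores.sum(axis=0)

test_x, test_y = make_data(200)
pred = softmax(test_x @ W + b)
accuracy = np.mean(pred.argmax(axis=1) == test_y.argmax(axis=1))
print(accuracy)
```

Using a small batch per step keeps each update cheap while still moving W and b in a useful direction on average.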

9. Evaluation

Here, the predicted value is compared with the correct answer. tf.argmax returns the index of the largest value along an axis, not the value itself. tf.argmax(y, 1) is therefore the digit the model considers most probable, and tf.argmax(y_, 1) is the correct digit. correct_prediction holds the result of the comparison as True / False for each image.

correct_prediction = tf.equal(tf.argmax(y,1),tf.argmax(y_,1))
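The comparison can be checked by hand on a tiny example. The following NumPy sketch uses three made-up predictions over three classes; `np.argmax` stands in for `tf.argmax` and `==` for `tf.equal`.

```python
import numpy as np

y = np.array([[0.1, 0.7, 0.2],     # model favors class 1
              [0.6, 0.3, 0.1],     # model favors class 0
              [0.2, 0.3, 0.5]])    # model favors class 2
y_ = np.array([[0, 1, 0],          # correct class is 1
               [0, 0, 1],          # correct class is 2
               [0, 0, 1]])         # correct class is 2

predicted = np.argmax(y, axis=1)    # index of the largest value in each row
actual = np.argmax(y_, axis=1)      # index of the 1 in each one-hot row
correct_prediction = predicted == actual
print(correct_prediction)  # [ True False  True]
```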

10. Evaluation calculation and output

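The correct answer rate is calculated by converting the True / False data to 1 / 0 with tf.cast and taking the mean with tf.reduce_mean. The rate is computed on the test data, which was not used for training.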

accuracy = tf.reduce_mean(tf.cast(correct_prediction, tf.float32))

print(sess.run(accuracy, feed_dict={x: mnist.test.images, y_:mnist.test.labels}))
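The cast-then-average step works like this on a small array. This is a NumPy sketch with an invented `correct_prediction` array; `astype` plays the role of `tf.cast` and `mean` the role of `tf.reduce_mean`.

```python
import numpy as np

correct_prediction = np.array([True, False, True, True])  # made-up results

as_numbers = correct_prediction.astype(np.float32)  # True -> 1.0, False -> 0.0
accuracy = as_numbers.mean()                        # fraction of correct answers
print(accuracy)  # 0.75
```

Three correct answers out of four give 0.75, in the same way that the tutorial's 0.9178 means about 92% of the test images were classified correctly.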
