Shortening the analysis time of OpenPose using sound

This article uses OpenPose, which estimates the posture of a person, and shortens its analysis time by using the sound of the video. I would like to use this method to analyze boxing form.

Execution environment

Machine: Google Colab

OpenPose: I use the source code linked below to get the key points. When doing so, it is a good idea to clone it from git to Drive using the techniques described here. https://colab.research.google.com/drive/1VO4TGJHKXnRKgiSAsNEeQcEHRgzUFFv9

Current issues

Analyzing the video takes a long time. I tried to analyze a 7-minute mitt-work video with OpenPose, but even on Google Colab it took an hour and a half to analyze 1 minute of video. At that rate the whole video takes about 10 and a half hours, which needs to be shortened considering the runtime and power consumption.

Solution

Use the sound to extract only the scenes you need (the moments when a punch is thrown and lands) and rebuild the video from them. Because the resulting video is shorter, analyzing it also takes less time.

The rest of the article explains the steps together with the source code.

Google Drive mount

You can access the data on Drive from Google Colab with the code below.

```
from google.colab import drive
drive.mount('/content/drive')
```

Generate audio file from video

Import required libraries

```
!pip3 install pydub
import shutil
from pydub import AudioSegment
```

Declare the paths required to read and output the video file

```
# Name of the video file to analyze (without extension)
onlyFileName = "box1911252"
# Add the video extension
filename = onlyFileName + ".mov"
# Folder containing the files
foldapath = '/content/drive/My Drive/Colab Notebooks/openpose_movie/openpose/'
# Copy the video into the working directory
shutil.copy(foldapath + filename, "output.mp4")
# Location of the video file to analyze
filepath = foldapath + filename
# Folder for the images cut out of the video based on the sound data
savepath = foldapath + 'resultSound/' + onlyFileName
```

Extract the audio track from the video file

```
# Video to audio conversion
!ffmpeg -i output.mp4 output.mp3
# Path of the sound file
filepathSound = './output.mp3'
# Load the sound data into sound
sound = AudioSegment.from_file(filepathSound, "mp3")
```

Get the number of frames in the video

To detect the punch timing in the sound data, each piece of sound has to be linked to the corresponding video frame. However, the number of audio samples and the number of frames differ even within the same video. For example, for a video of about 2 minutes, the audio contains 3480575 samples while the video has only 2368 frames. The audio therefore has to be divided into as many chunks as there are frames so that each chunk corresponds to one frame, and for that the number of frames is needed at this point.

```
import cv2
import os

def save_all_frames(video_path, dir_path, ext='jpg'):
    cap = cv2.VideoCapture(video_path)
    if not cap.isOpened():
        return
    os.makedirs(dir_path, exist_ok=True)
    digit = len(str(int(cap.get(cv2.CAP_PROP_FRAME_COUNT))))
    n = 0
    print(digit)
    # Read the video to the end and count the frames that are actually decoded
    while True:
        ret, frame = cap.read()
        if ret:
            n += 1
        else:
            cap.release()
            print(n)
            frameLength = n
            return frameLength

frameLength = save_all_frames(filepath, 'sample_video_img')
```
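The link between a frame and its audio chunk can be sketched as follows. This is only an illustration of the idea, using the sample and frame counts quoted above; the helper name `samples_for_frame` is hypothetical and does not appear in the original notebook.

```python
def samples_for_frame(frame_index, n_samples, n_frames):
    """Return the (start, stop) range of audio samples that belongs to one video frame."""
    samples_per_frame = n_samples // n_frames  # integer chunk size, the remainder is ignored
    start = frame_index * samples_per_frame
    return start, start + samples_per_frame

# Figures quoted in the text for the 2-minute example video
n_samples, n_frames = 3480575, 2368
print(n_samples // n_frames)                         # 1469 samples per frame
print(samples_for_frame(100, n_samples, n_frames))   # (146900, 148369)
```

With these numbers each frame covers 1469 samples, so a loud sample at position 146950 would be attributed to frame 100.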

Output the waveform of sound data

Plot the waveform of the collected sound data, inspect it, and then decide the hyperparameter. I considered automating this step, but decided to check the waveform visually and choose the parameter by hand for more flexibility.

First, output the waveform with the following code

```
## Input
import numpy as np
import matplotlib.pyplot as plt
import pandas as pd

samples = np.array(sound.get_array_of_samples())
# Keep one channel only
sample = samples[::sound.channels]
plt.plot(sample)
plt.show()
df = pd.DataFrame(sample)
print(df)

max_span = []
# frameLength contains the total number of frames of the video detected in the previous cell
len_frame = frameLength
span = len_frame
# Take the maximum sample value of each frame-sized chunk
for i in range(span):
    max_span.append(df[int(len(df)/span)*i:int(len(df)/span)*(i+1)-1][0].max())
df_max = pd.DataFrame(max_span)
```
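The per-frame maximum computed by the loop above can also be written without an explicit loop. The following is a minimal sketch of that idea with NumPy, not the notebook's own code: `per_frame_peak` is a hypothetical helper, and unlike the loop it keeps the last sample of every chunk.

```python
import numpy as np

def per_frame_peak(samples, n_frames):
    """Split samples into n_frames equal chunks and return the maximum of each chunk."""
    samples = np.asarray(samples)
    chunk = len(samples) // n_frames        # samples per frame (remainder dropped)
    trimmed = samples[:chunk * n_frames]    # make the length an exact multiple
    return trimmed.reshape(n_frames, chunk).max(axis=1)

# Tiny synthetic signal: 3 "frames" of 4 samples each
demo = [0, 1, 5, 2,   3, 3, 3, 3,   9, 0, -4, 1]
print(per_frame_peak(demo, 3))  # [5 3 9]
```

Like the original loop, this takes the plain maximum, so strongly negative samples are ignored; wrapping the input in `np.abs` would be one way to count them as well.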

You can see the waveform like this

The waveform briefly spikes at the moment of each punch. The spikes reach a sample value of roughly 10000 or more (the vertical axis is the raw sample amplitude, not a frequency in Hz), so this value is used as the hyperparameter. Set the hyperparameter with the code below, and store every chunk that exceeds it in an array together with its frame number.

```
# df_max is the loudness of the sound when divided into equal parts, which will be used later.
print(df_max)
punchCount = []
soundSize = 10000  # Hyperparameter
for i in range(len(df_max)):
    if df_max.iat[i, 0] > soundSize:
        #print(i, df_max.iat[i, 0])
        punchCount.append([i, df_max.iat[i, 0]])
df_punchCount = pd.DataFrame(punchCount)
print(df_punchCount)
```
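The list above records every frame over the threshold, so one punch that stays loud for several frames shows up more than once. The extraction code later in this article avoids this with a cooldown counter (`slipCount`). The sketch below isolates that idea on plain Python lists; the function name and the sample values are made up for illustration.

```python
def detect_punch_frames(peaks, threshold, cooldown):
    """Return indices whose peak exceeds threshold, skipping `cooldown` frames after each hit."""
    hits = []
    wait = 0
    for i, peak in enumerate(peaks):
        if wait > 0:
            wait -= 1            # still inside the cooldown of the previous hit
        elif peak > threshold:
            hits.append(i)
            wait = cooldown
    return hits

# Made-up per-frame peaks: one long spike (frames 1-3) and one short spike (frame 6)
peaks = [100, 12000, 15000, 11000, 200, 300, 13000, 100]
print(detect_punch_frames(peaks, threshold=10000, cooldown=3))  # [1, 6]
```

Without the cooldown the first spike would be reported three times (frames 1, 2 and 3).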

Generate extracted video

Based on the above processing, the frames around each punch are written out as a video.

```
# Save the frames around each moment the specified volume or higher is detected
import cv2
import os

def save_all_frames(video_path, dir_path, basename, ext='jpg'):
    cap = cv2.VideoCapture(video_path)

    if not cap.isOpened():
        return

    os.makedirs(dir_path, exist_ok=True)
    base_path = os.path.join(dir_path, basename)

    digit = len(str(int(cap.get(cv2.CAP_PROP_FRAME_COUNT))))
    frame = []

    n = 0
    # Cooldown counter to avoid detecting the same loud sound several frames in a row
    slipCount = 0

    # Save path for the output video file
    fourcc = cv2.VideoWriter_fourcc('m','p','4','v')
    video = cv2.VideoWriter('/content/drive/My Drive/data/movies/'+onlyFileName+'.mp4', fourcc, 20.0, (960, 540))
    # Save path for the video file used for analysis
    videoAnalize = cv2.VideoWriter('/content/drive/My Drive/data/movies/'+onlyFileName+'Analize.mp4', fourcc, 20.0, (960, 540))
    # Number of frames written to the video for each punch
    numFrames = 10

    for i in range(frameLength):
        ret, a_frame = cap.read()
        if not ret:
            break
        n += 1
        # Drop old frames so that memory use does not grow too much
        if n > 15:
            frame[n-15] = []
        frame.append(a_frame)
        # n >= numFrames prevents negative indices at the very start of the video
        if n >= numFrames and n < len(df_max) and df_max.iat[n,0] > soundSize and slipCount == 0:
            slipCount = 12
            pre_name = "box"+str(n)+"-"
            cv2.imwrite('{}{}.{}'.format(base_path, pre_name+str(n-10).zfill(digit), ext), frame[n-10])
            cv2.imwrite('{}{}.{}'.format(base_path, pre_name+str(n-2).zfill(digit), ext), frame[n-2])
            # frame has n elements, so the newest frame is frame[n-1]
            for m in range(numFrames):
                img = cv2.resize(frame[n+m-numFrames], (960,540))
                video.write(img)
                # Only the first frame and the last three go into the analysis video
                if m == 0 or m > 6:
                    videoAnalize.write(img)
        elif slipCount > 0:
            slipCount -= 1

    # Release the capture and both writers so that the output files are finalized
    cap.release()
    video.release()
    videoAnalize.release()
    print(n)

save_all_frames(filepath, savepath, 'samplePunch_img')
```

With the above, we were able to generate a video that contains only the punch timing.

The generated videos can be found in My Drive/data/movies.

The folder is not created automatically, so if it does not exist, you have to create it yourself.
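The extraction function keeps only the most recent frames in memory by overwriting old list entries. The same "keep the last N frames" behavior can be sketched with `collections.deque`, which discards old items automatically. This is an alternative illustration, not the code used in this article; `last_frames_at_hits` is a hypothetical name, and integers stand in for images.

```python
from collections import deque

def last_frames_at_hits(frames, hit_indices, keep=10):
    """Yield (index, last `keep` frames) for each hit, holding at most `keep` frames in memory."""
    hits = set(hit_indices)
    buffer = deque(maxlen=keep)   # the oldest frame is dropped automatically when full
    for i, frame in enumerate(frames):
        buffer.append(frame)
        if i in hits and len(buffer) == keep:
            yield i, list(buffer)

# Stand-in "frames": integers instead of images
for idx, clip in last_frames_at_hits(range(30), [12, 25], keep=5):
    print(idx, clip)
# 12 [8, 9, 10, 11, 12]
# 25 [21, 22, 23, 24, 25]
```

A hit that occurs before the buffer has filled is skipped, which sidesteps the negative-index problem near the start of the video.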

Analysis with OpenPose

The analysis basically follows the video analysis method at the URL below. The details are written there, so only the execution commands are listed here.

https://colab.research.google.com/drive/1VO4TGJHKXnRKgiSAsNEeQcEHRgzUFFv9

```
!git clone --recursive https://github.com/hamataro0710/kempo_motion_analysis
%cd /content/kempo_motion_analysis
!git pull --tags
!git checkout refs/tags/track_v1.0
!bash start.sh
!python estimate_video.py --path='/content/drive/My Drive/data/' --video={onlyFileName}"Analize.mp4"
```

This completes the OpenPose analysis of the video. You can check the resulting video at the link below.

https://www.youtube.com/embed/BUIfu-QD8x8

Result

Depending on the hyperparameter value and the number of extracted frames, the execution time dropped from about 10 hours to between 30 minutes and 1 hour.
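How much shorter the analysis video becomes depends on how many punches are detected and how many frames are kept per punch. The sketch below estimates the remaining fraction of frames. It assumes the extraction code above, which writes 4 frames per punch to the analysis video (`m == 0 or m > 6`); the punch count of 60 is a made-up example value, not a measurement.

```python
def reduction_ratio(total_frames, n_punches, frames_per_punch):
    """Fraction of the original frames that remain after extraction."""
    return (n_punches * frames_per_punch) / total_frames

# 2368 frames is the 2-minute example from the text; 60 punches is hypothetical
ratio = reduction_ratio(total_frames=2368, n_punches=60, frames_per_punch=4)
print(round(ratio, 3))  # 0.101
```

If OpenPose processing time scales roughly with the number of frames, which is an assumption here, a lower threshold (more detected punches) or more frames per punch raises this ratio and the run time with it.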

Challenges

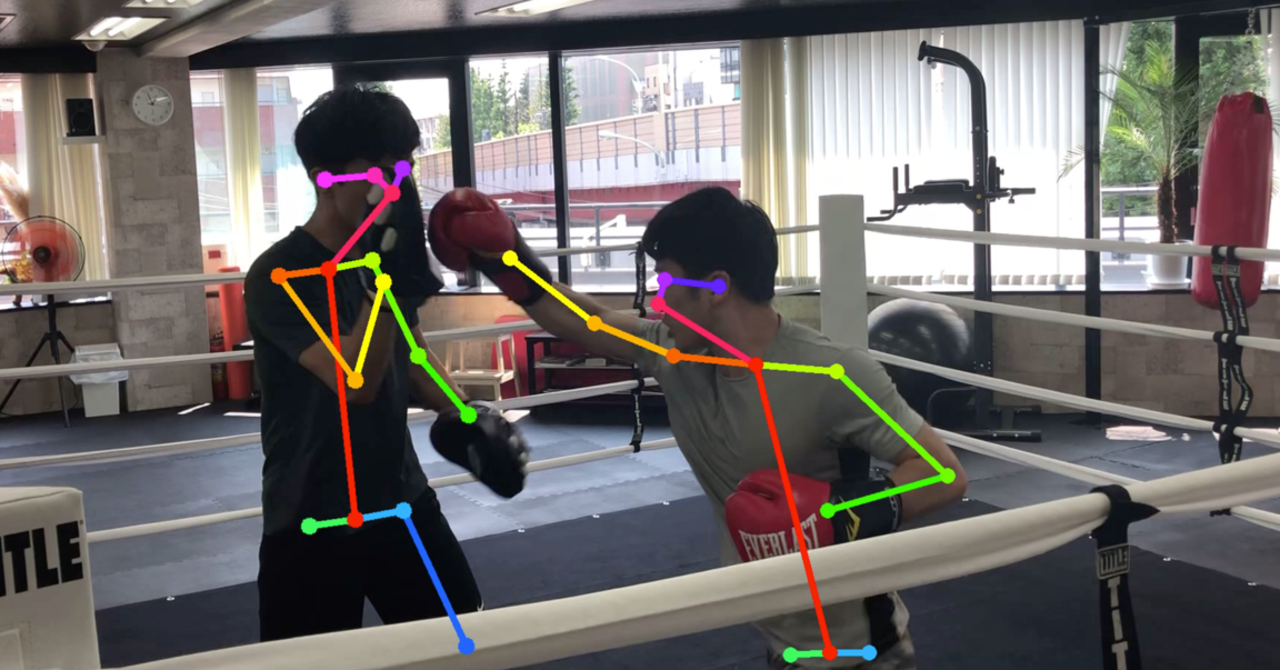

By using this, you can get the coordinates of each part of the body in chronological order.

However, when multiple people appear in the frame, it is not possible to tell which data belongs to which person, so the people need to be identified using YOLO or a similar method.
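To illustrate what "associating data with a person" means, the sketch below matches detections between two consecutive frames by nearest position. This is a much simpler stand-in than a detector such as YOLO and is not part of the original work; the function name and the coordinates are hypothetical.

```python
import math

def match_people(prev_centers, curr_centers):
    """Greedily assign each current detection to the nearest unused previous person."""
    assignment = {}
    used = set()
    for j, (cx, cy) in enumerate(curr_centers):
        best, best_dist = None, math.inf
        for i, (px, py) in enumerate(prev_centers):
            d = math.hypot(cx - px, cy - py)
            if i not in used and d < best_dist:
                best, best_dist = i, d
        if best is not None:
            assignment[j] = best   # current detection j is previous person `best`
            used.add(best)
    return assignment

# Made-up body-center coordinates (x, y) in pixels for two people
prev = [(100, 200), (400, 220)]
curr = [(405, 225), (98, 203)]
print(match_people(prev, curr))  # {0: 1, 1: 0}
```

Greedy matching like this can fail when people cross paths or leave the frame, which is one reason a detector-based approach is preferable.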

Extra edition

Since a single person can be analyzed without problems, I used this method to analyze the punching form in boxing.

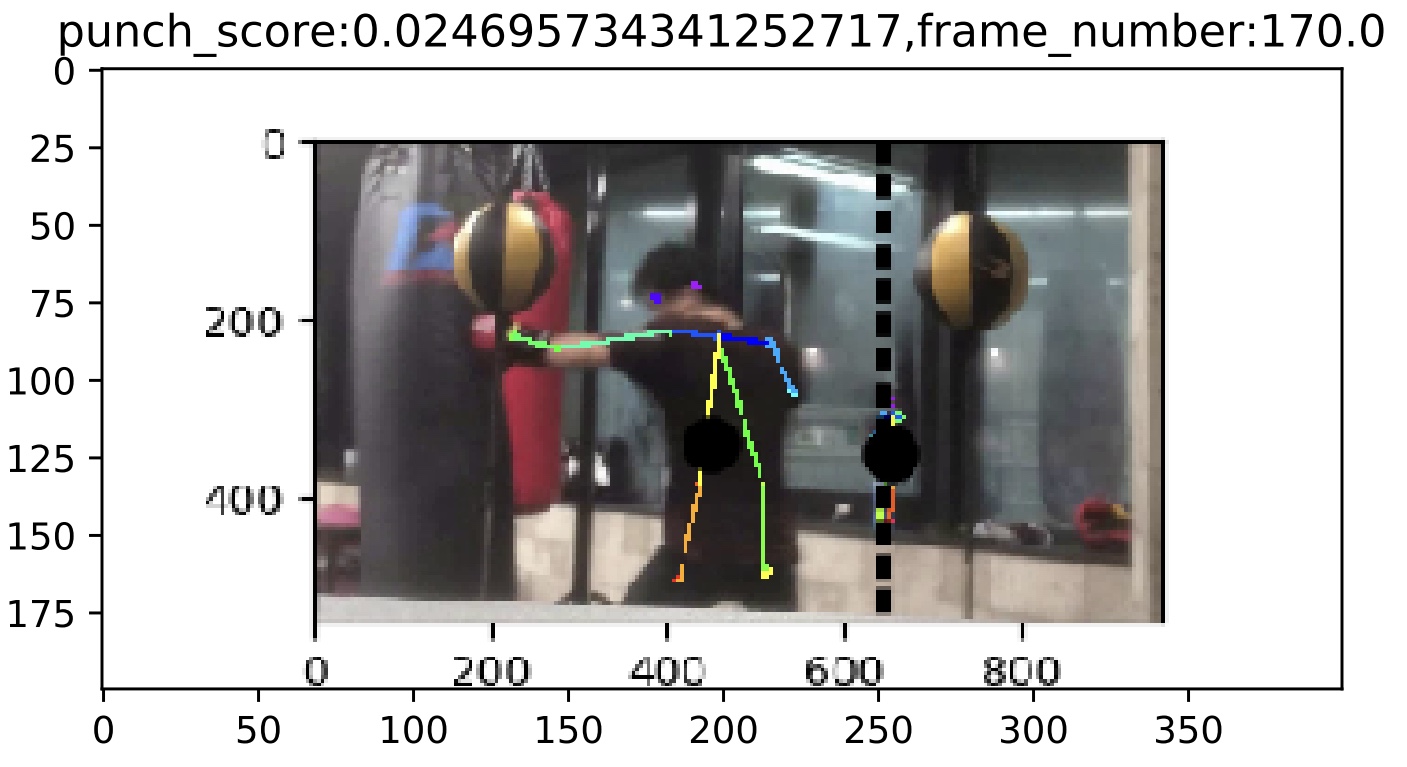

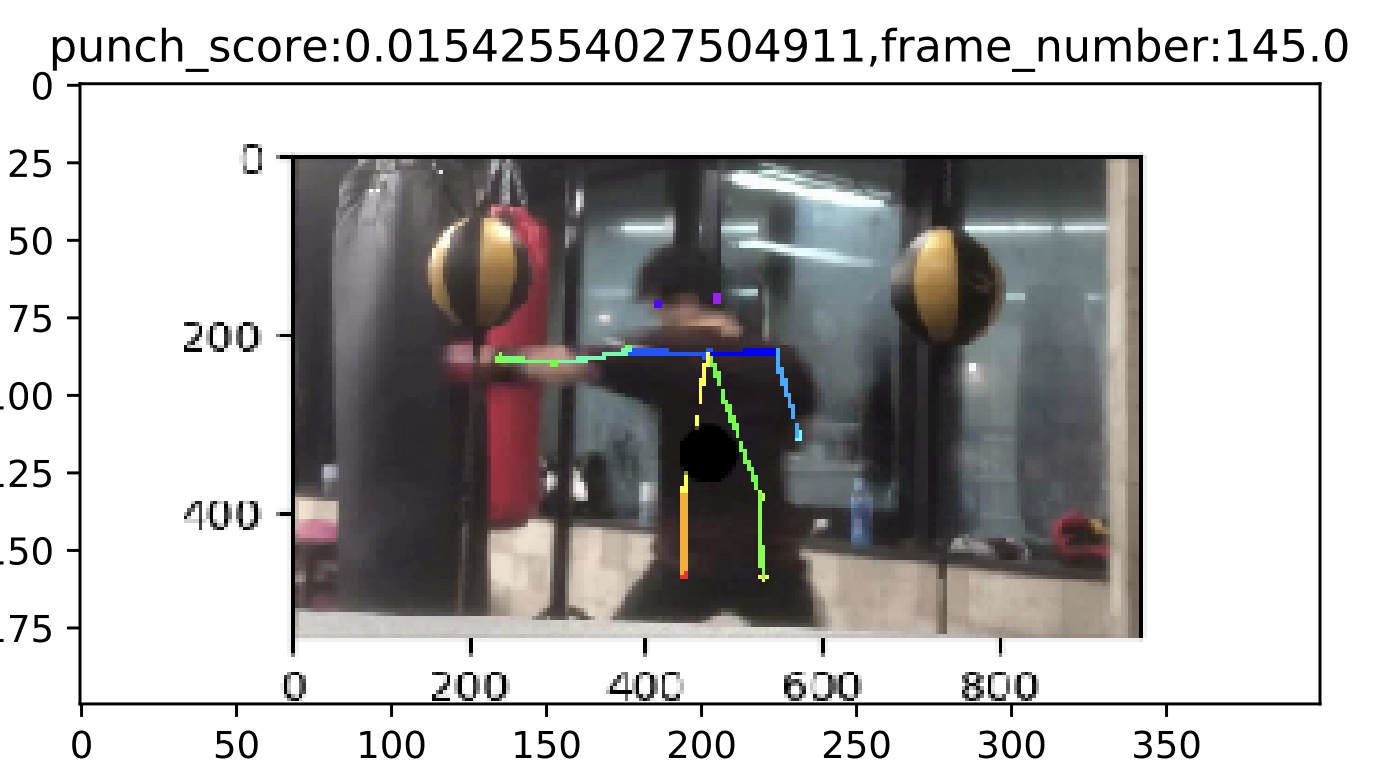

A good boxing punch has the elbow fully extended at the moment of impact. I therefore used OpenPose to examine which punches were fully extended at the moment of impact and which were not, and captured images to compare them.

An example of a punch where the arm is not fully extended

An example of a punch with the arm fully extended

Comparing the examples above, in the punch with the arm fully extended the shoulders are level and both shoulders and the hand form a straight line. In the punch that is not fully extended, one shoulder is raised and the line from the shoulder to the hand is not straight. This suggests that you can throw a good punch by focusing on rotating your shoulders rather than just pushing your hand out.
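The visual comparison could be turned into numbers by computing angles from the key point coordinates. The following is a minimal sketch, assuming 2D (x, y) pixel coordinates for the shoulder, elbow and wrist; the function names and the coordinates are made up and do not come from the OpenPose output in this article.

```python
import math

def joint_angle(a, b, c):
    """Angle at point b in degrees, formed by the segments b->a and b->c."""
    v1 = (a[0] - b[0], a[1] - b[1])
    v2 = (c[0] - b[0], c[1] - b[1])
    norm = math.hypot(*v1) * math.hypot(*v2)
    if norm == 0:
        raise ValueError("points must not coincide")
    cos = (v1[0] * v2[0] + v1[1] * v2[1]) / norm
    return math.degrees(math.acos(max(-1.0, min(1.0, cos))))

def shoulder_tilt(left_shoulder, right_shoulder):
    """Angle of the shoulder line from horizontal in degrees (0 = level)."""
    dx = abs(right_shoulder[0] - left_shoulder[0])
    dy = abs(right_shoulder[1] - left_shoulder[1])
    return math.degrees(math.atan2(dy, dx))

# Made-up coordinates: shoulder, elbow, wrist
print(joint_angle((100, 100), (150, 100), (200, 100)))  # 180.0 (arm fully extended)
print(joint_angle((100, 100), (150, 100), (150, 50)))   # 90.0 (elbow bent)
print(shoulder_tilt((100, 100), (200, 100)))            # 0.0 (shoulders level)
```

An elbow angle near 180 degrees together with a small shoulder tilt would correspond to the "fully extended" example described above.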

In the future, I would like to collect more data, calculate the correlation coefficient to test this hypothesis, and analyze the data using various other methods.

Finally

I apologize for the messy code m(_ _)m
