A peek at what LightGBM Tuner does internally

Introduction

LightGBMTuner, which was introduced in [a presentation at PyData.Tokyo at the end of September last year](https://pydatatokyo.connpass.com/event/141272/presentation/), has finally been implemented.

- [Automatic optimization of hyperparameters by LightGBM Tuner, an extension of Optuna | Preferred Networks Research & Development](https://tech.preferred.jp/ja/blog/hyperparameter-tuning-with-optuna-integration-lightgbm-tuner/)

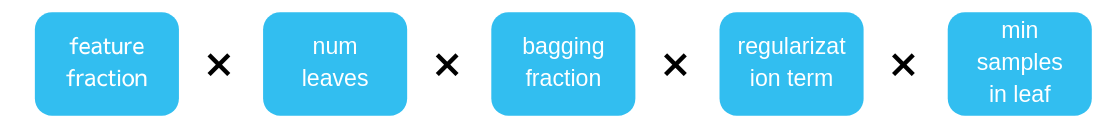

This is not specific to LightGBM: model hyperparameters are not independent but interact with one another. For that reason, tuning the parameters step by step, rather than all at once, can be expected to give higher accuracy. The idea, as far as I can tell, is to tune them in order, starting with the parameters that have the greatest influence.
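To make the step-by-step idea concrete, here is a minimal sketch of greedy stepwise tuning on a toy loss. It is not LightGBM Tuner's code: `stepwise_tune`, `toy_loss`, and the candidate lists are hypothetical names and values chosen for illustration, and the loss is a made-up function in which the two parameters interact.

```python
def stepwise_tune(objective, base_params, search_plan):
    """Tune one parameter at a time, fixing each best value before the next step."""
    best_params = dict(base_params)
    for name, candidates in search_plan:
        # Score every candidate for this parameter with all others held fixed.
        scores = {
            value: objective({**best_params, name: value}) for value in candidates
        }
        best_params[name] = min(scores, key=scores.get)
    return best_params


def toy_loss(params):
    """Made-up loss: the best num_leaves depends on feature_fraction."""
    ff = params["feature_fraction"]
    leaves = params["num_leaves"]
    return (ff - 0.7) ** 2 + ((leaves - 40 * ff) / 100) ** 2


# Hypothetical search plan: most influential parameter first.
search_plan = [
    ("feature_fraction", [0.4, 0.5, 0.6, 0.7, 0.8, 0.9, 1.0]),
    ("num_leaves", [16, 24, 28, 32, 48]),
]
best = stepwise_tune(
    toy_loss, {"feature_fraction": 1.0, "num_leaves": 31}, search_plan
)
print(best)
```

Each step evaluates only a handful of candidates, so the total number of trials is the sum of the per-step counts rather than their product. The trade-off is that a greedy search like this can miss combinations that are only good jointly.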

Test environment

- Windows 10

- Python 3.7

- optuna 1.0.0

Processing steps

The implementation is here

| No. | Step | Method name | Parameter name | Tuning range | Number of trials |
|---|---|---|---|---|---|
| 1 | feature_fraction (first pass) | `tune_feature_fraction()` | `feature_fraction` | 0.4 to 1.0 | 7 |
| 2 | num_leaves | `tune_num_leaves()` | `num_leaves` | 0 to 1 (sampled with `optuna.samplers.TPESampler`) | 20 |
| 3 | bagging | `tune_bagging()` | `bagging_fraction`, `bagging_freq` | 0 to 1 (sampled with `optuna.samplers.TPESampler`) | 10 |
| 4 | feature_fraction (second pass) | `tune_feature_fraction_stage2()` | `feature_fraction` | First-pass optimum ± 0.08 (values outside 0.4 to 1.0 are excluded) | 3 to 6 |
| 5 | regularization | `tune_regularization_factors()` | `lambda_l1`, `lambda_l2` | 0 to 1 (sampled with `optuna.samplers.TPESampler`) | 20 |
| 6 | min_data_in_leaf | `tune_min_data_in_leaf()` | `min_child_samples` | 5, 10, 25, 50, 100 | 5 |
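The two feature_fraction passes in the table can be illustrated with a small sketch. It assumes the candidates are evenly spaced: 7 points over 0.4 to 1.0 for the first pass, and 6 points over the first-pass optimum ± 0.08 for the second pass, with out-of-range points dropped. The even spacing and the count of 6 are assumptions that happen to match the trial counts in the table, not something confirmed from the tuner's code, and the helper names are hypothetical.

```python
def linspace(start, stop, num):
    """Return num evenly spaced values from start to stop, inclusive."""
    step = (stop - start) / (num - 1)
    return [start + i * step for i in range(num)]


def first_pass_candidates(low=0.4, high=1.0, num=7):
    """Coarse grid for the first feature_fraction pass (assumed evenly spaced)."""
    return [round(v, 3) for v in linspace(low, high, num)]


def second_pass_candidates(best, width=0.08, num=6, low=0.4, high=1.0):
    """Fine grid around the first-pass optimum, dropping out-of-range values."""
    eps = 1e-12  # tolerance for floating-point error at the range boundaries
    grid = linspace(best - width, best + width, num)
    return [round(v, 3) for v in grid if low - eps <= v <= high + eps]


print(first_pass_candidates())
print(second_pass_candidates(0.7))  # optimum in the middle: all 6 points survive
print(second_pass_candidates(0.4))  # optimum at the lower edge: only 3 survive
```

Under these assumptions, an optimum at either edge of the range keeps only the half of the grid that lies inside 0.4 to 1.0, which would explain why the second pass runs between 3 and 6 trials.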

The points I was interested in are as follows.

- What is the effect of tuning feature_fraction in two passes?
- Does feature_fraction really come before num_leaves?
- The tuning range of lambda_l1 and lambda_l2 is narrowed to 0 to 1 (I used to set the maximum to 100; was that too wide?)

If you want to change the tuning steps to your liking, you currently need to replace `run()` with a monkey patch.
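The monkey-patching pattern itself looks roughly like the sketch below. `StandInTuner` is a hypothetical stand-in class, not the real tuner: it borrows a subset of the method names from the table, and each step only records its name. The actual class, its constructor, and the signature of its `run()` may differ, so check the source of the installed Optuna version before patching it.

```python
class StandInTuner:
    """Hypothetical stand-in for the tuner; each step only records its name."""

    def __init__(self):
        self.executed = []

    def tune_feature_fraction(self):
        self.executed.append("feature_fraction")

    def tune_bagging(self):
        self.executed.append("bagging")

    def tune_feature_fraction_stage2(self):
        self.executed.append("feature_fraction_stage2")

    def tune_regularization_factors(self):
        self.executed.append("regularization_factors")

    def tune_min_data_in_leaf(self):
        self.executed.append("min_data_in_leaf")

    def run(self):
        # Default order for the subset of steps modelled here.
        self.tune_feature_fraction()
        self.tune_bagging()
        self.tune_feature_fraction_stage2()
        self.tune_regularization_factors()
        self.tune_min_data_in_leaf()


def custom_run(self):
    """Replacement run(): skip bagging and the second feature_fraction pass."""
    self.tune_feature_fraction()
    self.tune_regularization_factors()
    self.tune_min_data_in_leaf()


# Monkey patch: replace the method on the class so every instance uses it.
StandInTuner.run = custom_run

tuner = StandInTuner()
tuner.run()
print(tuner.executed)
```

Patching the class attribute affects all instances created before or after the patch, so it is best done once, near the imports, and kept in sync whenever the library is upgraded.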

Supplement

If the meaning of these parameters is unclear, a good starting point is to get an intuition for them from the link below.

- [The intuition behind the important parameters in gradient boosting - nykergoto's blog](https://nykergoto.hatenablog.jp/entry/2019/03/29/%E5%8B%BE%E9%85%8D%E3%83%96%E3%83%BC%E3%82%B9%E3%83%86%E3%82%A3%E3%83%B3%E3%82%B0%E3%81%A7%E5%A4%A7%E4%BA%8B%E3%81%AA%E3%83%91%E3%83%A9%E3%83%A1%E3%83%BC%E3%82%BF%E3%81%AE%E6%B0%97%E6%8C%81%E3%81%A1)
