Face detection with Haar Cascades

This is a Japanese translation of [Face Detection using Haar Cascades](http://docs.opencv.org/3.0-beta/doc/py_tutorials/py_objdetect/py_face_detection/py_face_detection.html#face-detection) from the "OpenCV-Python Tutorials", based on py_face_detection.markdown. The translation follows each passage of the original English. I hope it gets you interested in the algorithm.

The following page is up, so I don't need my crappy translation anymore.

OpenCV-Python tutorial documentation page at Tottori University: [Face detection using Haar Cascades](http://labs.eecs.tottori-u.ac.jp/sd/Member/oyamada/OpenCV/html/py_tutorials/py_objdetect/py_face_detection/py_face_detection.html#face-detection)

Face Detection using Haar Cascades

Goal

In this session,

- We will see the basics of face detection using Haar Feature-based Cascade Classifiers

- We will extend the same for eye detection etc.

In this section,

- Learn the basics of face detection using cascade classifiers based on Haar features.
- Extend the same approach to eye detection and more.

Basics

Object detection using Haar feature-based cascade classifiers is an effective object detection method proposed by Paul Viola and Michael Jones in their 2001 paper, "Rapid Object Detection using a Boosted Cascade of Simple Features". It is a machine-learning-based approach in which a cascade function is trained from a lot of positive and negative images. It is then used to detect objects in other images.

Object detection using cascade classifiers based on Haar features is an efficient object detection method proposed by Paul Viola and Michael Jones in the paper "Rapid Object Detection using a Boosted Cascade of Simple Features" (2001). The technique is a machine-learning-based approach in which a cascade classifier is trained from a large number of positive and negative images. After training, it is used to detect objects in other images.

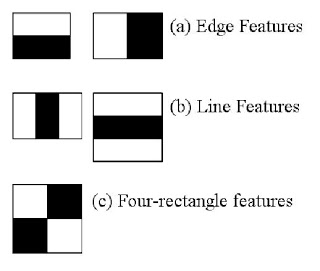

Here we will work with face detection. Initially, the algorithm needs a lot of positive images (images of faces) and negative images (images without faces) to train the classifier. Then we need to extract features from them. For this, the Haar features shown in the image above are used. They are just like a convolutional kernel. Each feature is a single value obtained by subtracting the sum of pixels under the white rectangle from the sum of pixels under the black rectangle.

Now let's get started with face detection. First, the algorithm needs a large number of positive images (images of faces) and negative images (images without faces) to train the classifier. Next, we need to extract features from the images. For this purpose, the Haar features shown in the figure above are used. Haar features are like a convolution kernel. Each feature is a single number: the sum of the pixel values under the black rectangle minus the sum of the pixel values under the white rectangle.
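As a rough illustration of the "black sum minus white sum" rule described above, here is a minimal sketch of a two-rectangle (edge-type) feature. The helper name `two_rect_feature` and the choice of the left half as the white rectangle are assumptions made for this example, not something taken from OpenCV or the paper.

```python
import numpy as np

def two_rect_feature(window, top, left, height, width):
    """Hypothetical edge-type Haar feature (illustration only).

    The rectangle is split vertically: the left half is treated as the
    white rectangle and the right half as the black rectangle.
    `width` is assumed to be even.
    """
    half = width // 2
    white = window[top:top + height, left:left + half].sum()
    black = window[top:top + height, left + half:left + width].sum()
    # Feature value = sum under black rectangle - sum under white rectangle
    return int(black - white)

# Toy 24x24 window: left half is 0, right half is 10
window = np.zeros((24, 24), dtype=np.int64)
window[:, 12:] = 10

# The feature straddles the boundary at column 12
value = two_rect_feature(window, top=4, left=8, height=6, width=8)
print(value)
```

A large absolute value means the two halves differ strongly in brightness, which is the kind of contrast these features respond to.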

Now all possible sizes and locations of each kernel are used to calculate plenty of features. (Just imagine how much computation that needs. Even a 24x24 window results in over 160000 features.) For each feature calculation, we need to find the sum of pixels under the white and black rectangles. To solve this, they introduced the integral image. It reduces the calculation of a sum of pixels, however large the number of pixels, to an operation involving just four pixels. Nice, isn't it? It makes things super-fast.

At this point, all possible sizes and positions of each kernel are used to calculate many features. (Imagine how much computation is needed. Even a 24x24 pixel window has more than 160000 features.) For each feature, we need the sum of the pixels under the white and black rectangles. To solve this problem, they introduced the integral image. With an integral image, the sum of pixel values is easy to compute: no matter how many pixels there are, it is replaced by an operation on just four values (in the integral image). Nice, isn't it? The integral image makes this very fast.
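The four-value lookup mentioned above can be sketched with NumPy as follows. The function names `integral_image` and `rect_sum` are made up for this example, and padding the table with an extra row and column of zeros is one common convention, assumed here to keep the indexing simple.

```python
import numpy as np

def integral_image(img):
    """Summed-area table padded with a zero row and column,
    so that ii[y, x] equals the sum of img[:y, :x]."""
    ii = np.zeros((img.shape[0] + 1, img.shape[1] + 1), dtype=np.int64)
    ii[1:, 1:] = img.cumsum(axis=0).cumsum(axis=1)
    return ii

def rect_sum(ii, top, left, height, width):
    """Sum of the pixels in a rectangle using only four table entries."""
    bottom, right = top + height, left + width
    return int(ii[bottom, right] - ii[top, right]
               - ii[bottom, left] + ii[top, left])

# Toy 24x24 image with distinct pixel values
img = np.arange(24 * 24, dtype=np.int64).reshape(24, 24)
ii = integral_image(img)

fast = rect_sum(ii, top=3, left=5, height=10, width=7)
slow = int(img[3:13, 5:12].sum())  # direct summation for comparison
print(fast == slow)
```

The table is built once per image; after that, every rectangle sum costs the same four lookups regardless of the rectangle's size.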

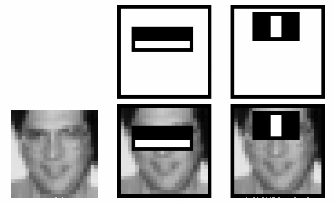

But among all these features we calculated, most of them are irrelevant. For example, consider the image above. The top row shows two good features. The first feature selected seems to focus on the property that the region of the eyes is often darker than the region of the nose and cheeks. The second feature selected relies on the property that the eyes are darker than the bridge of the nose. But the same windows applied to the cheeks or any other place are irrelevant. So how do we select the best features out of 160000+ features? It is achieved by AdaBoost.

However, most of these calculated features are irrelevant (for face identification). See the figure above for an example. The top row shows two good features. The first selected feature appears to focus on the property that the eye region tends to be darker than the nose and cheek regions. The second selected feature relies on the property that the eyes are darker than the bridge of the nose. But the same windows applied to the cheeks or elsewhere are irrelevant (for distinguishing whether it is a face or not). So how do you select the best features from over 160000 features? It is achieved by **AdaBoost**.

For this, we apply each and every feature to all the training images. For each feature, it finds the best threshold that classifies the images as face (positive) or non-face (negative). Obviously, there will be errors or misclassifications. We select the features with the minimum error rate, which means they are the features that best classify the face and non-face images. (The process is not as simple as this. Each image is given an equal weight in the beginning. After each classification, the weights of misclassified images are increased. Then the same process is repeated: new error rates and new weights are calculated. The process continues until the required accuracy or error rate is achieved, or the required number of features is found.)

To do this, each feature is applied to all training images. For each feature, the best threshold for classifying the images into positive and negative is found. Naturally, there are errors and misclassifications. The features with the lowest error rate are selected; in other words, the features that best separate face and non-face images. (The actual process is not as easy as described here. Initially all images are given the same weight. After each classification, the weights of the misclassified images are increased, and the same process is run again with new error rates and new weights. This repeats until the required accuracy or error rate is achieved, or the required number of features is found.)
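To make the threshold search and the reweighting step more concrete, here is a minimal sketch of one boosting round for a single feature. The function names and the toy data are invented for illustration. The update rule (scale the weights of correctly classified samples by error / (1 - error), then renormalize) is one standard AdaBoost-style choice and is an assumption here, since the text above only says that misclassified weights are increased.

```python
def stump_predict(value, threshold, polarity):
    """Weak classifier: 1 (face) if polarity * value < polarity * threshold."""
    return 1 if polarity * value < polarity * threshold else 0

def best_stump(values, labels, weights):
    """Return (weighted_error, threshold, polarity) with the lowest error.
    Candidate thresholds are taken from the observed feature values."""
    best = (float("inf"), None, None)
    for threshold in sorted(set(values)):
        for polarity in (1, -1):
            error = sum(w for v, y, w in zip(values, labels, weights)
                        if stump_predict(v, threshold, polarity) != y)
            if error < best[0]:
                best = (error, threshold, polarity)
    return best

def reweight(values, labels, weights, error, threshold, polarity):
    """Shrink weights of correctly classified samples, then renormalize,
    so misclassified samples gain relative weight. Assumes 0 < error < 1."""
    beta = error / (1.0 - error)
    scaled = [w * (beta if stump_predict(v, threshold, polarity) == y else 1.0)
              for v, y, w in zip(values, labels, weights)]
    total = sum(scaled)
    return [w / total for w in scaled]

# Toy data: one feature value per training image, label 1 = face
values = [1, 2, 3, 8, 9]
labels = [1, 1, 0, 0, 1]
weights = [0.2] * 5  # equal weights at the start

error, threshold, polarity = best_stump(values, labels, weights)
new_weights = reweight(values, labels, weights, error, threshold, polarity)
print(error, threshold, polarity, new_weights)
```

In this toy run only the last sample is misclassified, so after renormalization it carries much more weight than the others and the next round is pushed to get it right.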

The final classifier is a weighted sum of these weak classifiers. Each is called weak because it alone can't classify the image, but together with others it forms a strong classifier. The paper says even 200 features provide detection with 95% accuracy. Their final setup had around 6000 features. (Imagine a reduction from 160000+ features to 6000 features. That is a big gain.)

The final classifier is obtained as a weighted sum of these weak classifiers. Each is called a weak classifier because it cannot classify images on its own, but combined with other weak classifiers it forms a strong classifier. According to the paper, even 200 features enable detection with 95% accuracy. Their final configuration uses about 6000 features. (Consider that the number of features has been reduced from over 160000 to 6000. That is a considerable reduction.)
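The "160000+" figure can be sanity-checked with a short count. The sketch below assumes five base feature shapes (two-rectangle horizontal and vertical, three-rectangle horizontal and vertical, and a four-rectangle 2x2 pattern), each scaled by whole multiples and placed at every position in a 24x24 window. That enumeration is an assumption for illustration, and other conventions give somewhat different totals.

```python
def count_features(window=24,
                   shapes=((2, 1), (1, 2), (3, 1), (1, 3), (2, 2))):
    """Count rectangle features in a square window.

    Each shape is (base_width, base_height) in cells; a feature may be
    scaled to any multiple of its base size and placed anywhere it fits.
    """
    total = 0
    for base_w, base_h in shapes:
        for w in range(base_w, window + 1, base_w):
            for h in range(base_h, window + 1, base_h):
                # Number of top-left positions where a w x h feature fits
                total += (window - w + 1) * (window - h + 1)
    return total

total = count_features()
print(total)  # 162336
```

Under these assumptions the count is consistent with the "over 160000" quoted above, which makes the reduction to about 6000 selected features easier to appreciate.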

So now you take an image. Take each 24x24 window. Apply 6000 features to it. Check if it is a face or not. Wow.. Isn't it a little inefficient and time consuming? Yes, it is. The authors have a good solution for that.

So now take an image. Take each 24x24 pixel window. Apply the 6000 features to the window. Check whether it is a face or not. Isn't that rather inefficient and time-consuming? Yes, it is. The authors have a good solution to that.

In an image, most of the image is non-face region. So it is a better idea to have a simple method to check whether a window is not a face region. If it is not, discard it in a single shot and don't process it again. Instead, focus on regions where there can be a face. This way, we can spend more time checking possible face regions.

In a single image, most areas are non-face areas. So it's a good idea to have an easy way to check that a window is not a face area. If it is not, throw it away immediately; it will not be processed again (by the strong classifier). Instead, focus on areas that may be faces. In this way, you can spend more time checking possible face areas.

For this they introduced the concept of a Cascade of Classifiers. Instead of applying all 6000 features to a window, group the features into different stages of classifiers and apply them one by one. (Normally the first few stages contain very few features.) If a window fails the first stage, discard it. We don't consider the remaining features on it. If it passes, apply the second stage of features and continue the process. A window that passes all stages is a face region. How is that for a plan!

For this, they introduced the concept of a **cascade of classifiers**. Instead of applying all 6000 features to one window, the features are grouped into classifiers belonging to different stages and applied one stage at a time. (Usually the first few stages contain a very small number of features.)

If a window fails the first stage, discard it; the remaining features are not evaluated for that window. If it passes the first stage, apply the second stage of features and continue processing. A window that passes all stages is a face region.
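The early-rejection idea can be sketched in a few lines. This is a simplified illustration, not OpenCV's implementation: `run_cascade`, the stage layout, and the toy features are all hypothetical, and each stage here simply sums raw feature scores and compares them with a stage threshold, whereas a real stage combines weighted, thresholded weak classifiers.

```python
def run_cascade(window, stages):
    """Evaluate a window stage by stage.

    stages: list of (features, stage_threshold), where each feature is a
    callable returning a score for the window.
    Returns (is_face, number_of_features_evaluated).
    """
    evaluated = 0
    for features, stage_threshold in stages:
        score = 0.0
        for feature in features:
            score += feature(window)
            evaluated += 1
        if score < stage_threshold:
            # Rejected: later stages are never evaluated for this window
            return False, evaluated
    return True, evaluated

# Toy cascade: a 1-feature first stage, then a 2-feature second stage
stages = [
    ([lambda w: w[0]], 1.0),
    ([lambda w: w[1], lambda w: w[2]], 3.0),
]

print(run_cascade([0, 5, 5], stages))  # rejected by stage 1
print(run_cascade([2, 1, 1], stages))  # rejected by stage 2
print(run_cascade([2, 2, 2], stages))  # passes every stage
```

Because most windows are rejected by the cheap early stages, the average number of features evaluated per window stays far below the total.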

The authors' detector had 6000+ features in 38 stages, with 1, 10, 25, 25 and 50 features in the first five stages. (The two features in the image above were actually obtained as the best two features from AdaBoost.) According to the authors, on average 10 features out of 6000+ are evaluated per sub-window.

The authors' detector is a 38-stage classifier with more than 6000 features, with 1, 10, 25, 25 and 50 features in the first five stages. (The two features in the figure above were actually obtained by AdaBoost as the two best features.) According to the authors, on average 10 of the 6000+ features are evaluated per sub-window.

So this is a simple, intuitive explanation of how Viola-Jones face detection works. Read the paper for more details or check out the references in the Additional Resources section.

That was a quick and intuitive explanation of how Viola-Jones face detection works. For more information, read the paper or refer to the references in the Additional Resources section.

Haar-cascade Detection in OpenCV

OpenCV comes with a trainer as well as a detector. If you want to train your own classifier for any object such as cars or planes, you can use OpenCV to create one. Full details are given here: Cascade Classifier Training.

OpenCV has a trainer as well as a detector. If you want to train your own classifiers for objects such as cars and planes, you can use OpenCV for that. The details can be found here: Cascade Classifier Training.

Here we will deal with detection. OpenCV already contains many pre-trained classifiers for faces, eyes, smiles, etc. Those XML files are stored in the opencv/data/haarcascades/ folder. Let's create a face and eye detector with OpenCV.

Now let's move on to detection. OpenCV already includes pre-trained classifiers for face, eye, and smile detection, among others. Those XML files are stored in the opencv/data/haarcascades/ folder. Let's make a face and eye detector with OpenCV.

First we need to load the required XML classifiers. Then load our input image (or video) in grayscale mode.

First, you need to load the required XML classifiers. Then load the input image (or video) in grayscale mode.

```python
import numpy as np
import cv2

# Load the pre-trained cascade classifiers from their XML files
face_cascade = cv2.CascadeClassifier('haarcascade_frontalface_default.xml')
eye_cascade = cv2.CascadeClassifier('haarcascade_eye.xml')

# Read the input image and convert it to grayscale
img = cv2.imread('sachin.jpg')
gray = cv2.cvtColor(img, cv2.COLOR_BGR2GRAY)
```

Now we find the faces in the image. If faces are found, it returns the positions of the detected faces as Rect(x,y,w,h). Once we get these locations, we can create a ROI for the face and apply eye detection on this ROI (since eyes are always on the face!).

Now find the faces in the image. If faces are found, the position of each detected face is returned as Rect(x,y,w,h). Once these locations are known, you can set a ROI for the face and apply eye detection to this ROI (because eyes are always on the face).

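```python
# Detect faces; 1.3 is the scaleFactor and 5 is minNeighbors
faces = face_cascade.detectMultiScale(gray, 1.3, 5)
for (x,y,w,h) in faces:
    # Draw a blue rectangle (BGR order) around each face
    cv2.rectangle(img,(x,y),(x+w,y+h),(255,0,0),2)
    # Restrict eye detection to the face region (ROI)
    roi_gray = gray[y:y+h, x:x+w]
    roi_color = img[y:y+h, x:x+w]
    eyes = eye_cascade.detectMultiScale(roi_gray)
    for (ex,ey,ew,eh) in eyes:
        # Eye coordinates are relative to the ROI, so draw on roi_color
        cv2.rectangle(roi_color,(ex,ey),(ex+ew,ey+eh),(0,255,0),2)

cv2.imshow('img',img)
cv2.waitKey(0)
cv2.destroyAllWindows()
```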

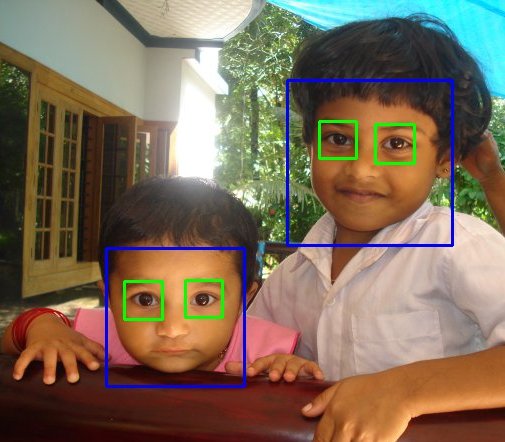

Result looks like below: The result is as follows:
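One detail of the detection loop above is easy to miss: the eye rectangles come from running the detector on the face ROI, so their coordinates are relative to the top-left corner of the face, not to the whole image. Drawing on `roi_color` works because it is a slice (a view) of `img`. If you need the eye positions in full-image coordinates, a small helper like the following sketch can do the conversion. The name `roi_to_image_coords` and the sample numbers are made up for illustration.

```python
def roi_to_image_coords(face, eye):
    """Convert an eye rectangle from ROI coordinates to image coordinates.

    face: (x, y, w, h) of the face in the full image
    eye:  (ex, ey, ew, eh) of the eye relative to the face ROI
    """
    x, y, _, _ = face
    ex, ey, ew, eh = eye
    # Shift by the ROI's top-left corner; width and height are unchanged
    return (x + ex, y + ey, ew, eh)

# Hypothetical detections, for illustration only
face = (100, 50, 80, 80)
eye = (15, 20, 20, 10)

eye_in_image = roi_to_image_coords(face, eye)
print(eye_in_image)  # (115, 70, 20, 10)
```

This is handy when the eye positions are stored or compared across faces rather than just drawn.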

Additional Resources

1. Video Lecture on Face Detection and Tracking
2. An interesting interview regarding Face Detection by Adam Harvey

Exercises
