Introduction to Scrapy (1)

Introduction

There are several ways to do web scraping in Python. This article looks at Scrapy, a scraping framework, and walks through its basics by building a simple sample.

What is Scrapy?

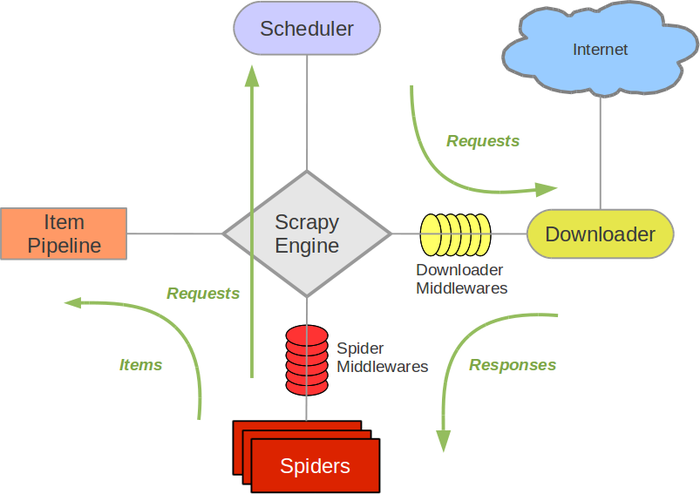

Scrapy is a fast, high-level scraping framework that provides a range of features for crawling websites and extracting data from them. Its main features are split into components, and you build a program by writing classes for the components you need.

From http://doc.scrapy.org/en/1.0/topics/architecture.html

The main components are:

Scrapy Engine

- Controls the data flow between components

- Fires events when particular actions occur

Scheduler

- Receives requests from the Engine, then queues and schedules them

Downloader

- Performs the actual downloading

- Custom processing can be inserted with downloader middleware

Spiders

- Custom classes written by the user

- Describe the URLs to fetch and the parts of each page to extract

- Scrape the downloaded content to create Items

Item Pipeline

- Handles output processing for Items extracted by a spider

- Data cleansing and validation

- Persistence (JSON, File, DB, Mail)
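To make the division of labor between these components more concrete, here is a toy, in-memory model of the data flow. It is only a sketch and not how Scrapy is implemented: `FAKE_SITE`, `Request`, `Scheduler`, `download`, `ToySpider`, `CollectPipeline`, and `run_engine` are all hypothetical names invented for this illustration, and no network access or Scrapy import is involved.

```python
from collections import deque

# Hypothetical in-memory "website" that stands in for the network.
FAKE_SITE = {
    "/calendars": {"links": ["/calendars/python", "/calendars/ruby"]},
    "/calendars/python": {"title": "Python Advent Calendar", "entries": ["/a", "/b"]},
    "/calendars/ruby": {"title": "Ruby Advent Calendar", "entries": ["/c"]},
}


class Request:
    """A URL to fetch plus the spider method that should handle the result."""

    def __init__(self, url, callback):
        self.url = url
        self.callback = callback


class Scheduler:
    """Queues requests handed over by the engine (simple FIFO in this toy)."""

    def __init__(self):
        self._queue = deque()

    def enqueue(self, request):
        self._queue.append(request)

    def next_request(self):
        return self._queue.popleft() if self._queue else None


def download(request):
    """Downloader: fetch the page for a request (a dict lookup here)."""
    return FAKE_SITE[request.url]


class ToySpider:
    """Spider: says where to start and what to extract."""

    start_urls = ["/calendars"]

    def parse(self, page):
        # Follow every link found on the listing page.
        for link in page["links"]:
            yield Request(link, callback=self.parse_item)

    def parse_item(self, page):
        # Turn a downloaded page into an item.
        yield {"title": page["title"], "urls": page["entries"]}


class CollectPipeline:
    """Item pipeline: receives every extracted item (here it just stores them)."""

    def __init__(self):
        self.items = []

    def process_item(self, item):
        self.items.append(item)


def run_engine(spider, scheduler, pipeline):
    """Engine: moves requests, pages, and items between the other components."""
    for url in spider.start_urls:
        scheduler.enqueue(Request(url, callback=spider.parse))
    while True:
        request = scheduler.next_request()
        if request is None:
            break
        page = download(request)
        for result in request.callback(page):
            if isinstance(result, Request):
                scheduler.enqueue(result)      # new URL to crawl
            else:
                pipeline.process_item(result)  # extracted item
    return pipeline.items


items = run_engine(ToySpider(), Scheduler(), CollectPipeline())
for item in items:
    print(item)
```

The point of the sketch is the loop in `run_engine`: the spider only yields requests and items, and everything else (queuing, downloading, output) is handled around it.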

As you can see, Scrapy offers a lot of functionality. In this article I start with the Spider, the most basic concept in Scrapy, and write a program that collects the URLs of the posts listed in the Advent Calendars on Qiita.

Installation

First, install Scrapy with pip.

pip install scrapy

Creating a Spider

Next, create a Spider, one of the components described above. A Spider defines the URLs where crawling starts and the logic for extracting URLs and data from the downloaded pages.

# -*- coding: utf-8 -*-
import scrapy


class QiitaSpider(scrapy.Spider):
    name = 'qiita_spider'

    # Entry point: the URLs where crawling starts
    start_urls = ['http://qiita.com/advent-calendar/2015/categories/programming_languages']

    # Wait about 1 second between requests to the same site
    custom_settings = {
        "DOWNLOAD_DELAY": 1,
    }

    # Extract the URL of each calendar from the category page
    def parse(self, response):
        for href in response.css('.adventCalendarList .adventCalendarList_calendarTitle > a::attr(href)'):
            full_url = response.urljoin(href.extract())

            # Create a Request for the extracted URL; parse_item handles the response
            yield scrapy.Request(full_url, callback=self.parse_item)

    # Build an item from the downloaded calendar page
    def parse_item(self, response):
        urls = []
        for href in response.css('.adventCalendarItem_entry > a::attr(href)'):
            full_url = response.urljoin(href.extract())
            urls.append(full_url)

        yield {
            'title': response.css('h1::text').extract(),
            'urls': urls,
        }
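The spider calls `response.urljoin` because `href` attributes are often relative. As far as I know, it resolves them against the URL of the response in much the same way as the standard library's `urllib.parse.urljoin`. The sketch below uses only the standard library to show that resolution; `resolve_links` is a hypothetical helper, and the example hrefs are made up for illustration rather than taken from the real page.

```python
from urllib.parse import urljoin


def resolve_links(page_url, hrefs):
    """Resolve each href against the URL of the page it was found on."""
    return [urljoin(page_url, href) for href in hrefs]


page_url = "http://qiita.com/advent-calendar/2015/categories/programming_languages"

# Hypothetical hrefs: root-relative, relative to the current path, and absolute.
hrefs = ["/advent-calendar/2015/python", "python", "http://example.com/post"]

for full_url in resolve_links(page_url, hrefs):
    print(full_url)
```

A root-relative href replaces the whole path, a bare relative href replaces only the last path segment, and an absolute URL is returned unchanged.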

Run

Scrapy comes with many commands. Here, use the runspider command to run the Spider. With the -o option, the items yielded by parse_item are saved to a file, in this case in JSON format.

scrapy runspider qiita_spider.py -o advent_calendar.json

Result

The output looks like this. I was able to get the title of each Advent Calendar along with the URLs of its posts!

{
    "urls": [
        "http://loboskobayashi.github.io/pythonsf/2015/12-01/recomending_PythonSf_one-liners_for_general_python_codes/",
        "http://qiita.com/csakatoku/items/444db2d0e421265ec106",
        "http://qiita.com/csakatoku/items/86904adaa0922e80069c",
        "http://qiita.com/Hironsan/items/fb6ee6b8e0291a7e5a1d",
        "http://qiita.com/csakatoku/items/77a36888d0851f6d4ff0",
        "http://qiita.com/csakatoku/items/a5b6c3604c0d411154fa",
        "http://qiita.com/sharow/items/21e421a2a40275ee9bb8",
        "http://qiita.com/Lspeciosum/items/d248b64d1bdcb8e39d32",
        "http://qiita.com/CS_Toku/items/353fd4b0fd9ed17dc152",
        "http://hideharaaws.hatenablog.com/entry/django-upgrade-16to18",
        "http://qiita.com/FGtatsuro/items/0efebb9b58374d16c5f0",
        "http://shinyorke.hatenablog.com/entry/2015/12/12/143121",
        "http://qiita.com/wrist/items/5759f894303e4364ebfd",
        "http://qiita.com/kimihiro_n/items/86e0a9e619720e57ecd8",
        "http://qiita.com/satoshi03/items/ae616dc080d085604b06",
        "http://qiita.com/CS_Toku/items/32028e65a8bfa97266d6",
        "http://fx-kirin.com/python/nfp-linear-reggression-model/"
    ],
    "title": [
        "Python \u305d\u306e2 Advent Calendar 2015"
    ]
},
{
    "urls": [
        "http://qiita.com/nasa9084/items/40f223b5b44f13ef2925",
        "http://studio3104.hatenablog.com/entry/2015/12/02/120957",
        "http://qiita.com/Tsutomu-KKE@github/items/29414e2d4f30b2bc94ae",
        "http://qiita.com/icoxfog417/items/913bb815d8d419148c33",
        "http://qiita.com/sakamotomsh/items/ab6c15b971587905ef43",
        "http://cocu.hatenablog.com/entry/2015/12/06/022100",
        "http://qiita.com/masashi127/items/ca092c13e1300f4f6ade",
        "http://qiita.com/kaneshin/items/269bc5f156d86f8a91c4",
        "http://qiita.com/wh11e7rue/items/15603e8970c36ab9733d",
        "http://qiita.com/ohkawa/items/368e6bcadc56a118adaf",
        "http://qiita.com/teitei_tk/items/5c5c9e653b3a13108d12",
        "http://sinhrks.hatenablog.com/entry/2015/12/13/215858"
    ],
    "title": [
        "Python Advent Calendar 2015"
    ]
},
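Once the file is written, it can be loaded back with the standard `json` module. The following is a minimal sketch that assumes the complete file is a JSON array of objects shaped like the excerpt above; `load_calendars` and `summarize` are hypothetical helper names, and the inline sample reuses one entry from the output so the snippet runs without the file.

```python
import json


def load_calendars(path):
    """Load the items saved by the -o option (assumed to be a JSON array)."""
    with open(path, encoding="utf-8") as f:
        return json.load(f)


def summarize(calendars):
    """Return a (title, number of post URLs) pair for each calendar item."""
    summary = []
    for item in calendars:
        # 'title' is a list because the spider stores the result of extract().
        titles = item.get("title") or ["(untitled)"]
        summary.append((titles[0], len(item.get("urls", []))))
    return summary


# Inline sample with the same shape as the output, so no file is needed here.
sample = json.loads(r'''
[
    {
        "urls": ["http://qiita.com/nasa9084/items/40f223b5b44f13ef2925"],
        "title": ["Python \u305d\u306e2 Advent Calendar 2015"]
    }
]
''')

for title, count in summarize(sample):
    print(title, count)

# With the real output file:
# print(summarize(load_calendars("advent_calendar.json")))
```

Note that the `\u305d\u306e` escapes in the file are ordinary JSON escapes for Japanese characters, so they are decoded back to readable text on load.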

Conclusion

In this article, I created a Spider, a basic component of Scrapy, and used it to scrape a site. When you use Scrapy, the framework takes care of the routine processing involved in crawling, so developers can concentrate on the parts their service or application really needs, such as URL extraction and data storage. The next articles will cover caching and saving data. Stay tuned!
